A quick recap

This is a follow-up article to our previous Introduction to Coreference Resolution. We recommend it if you’re looking for a good theoretical background supported by examples. In turn, this article covers the most popular coreference resolution libraries, while showing their strengths and weaknesses.

Just to briefly recap – coreference resolution (CR) is a challenging Natural Language Processing (NLP) task. It aims to group together expressions that refer to the same real-world entity in order to acquire less ambiguous text. It’s useful in such tasks as text understanding, question answering, and summarization.

Coreference resolution by example

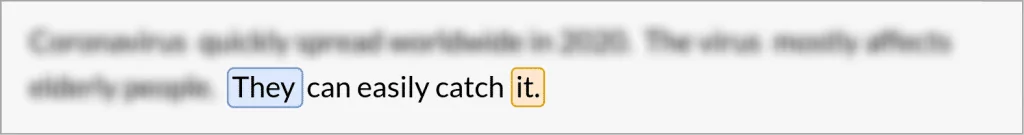

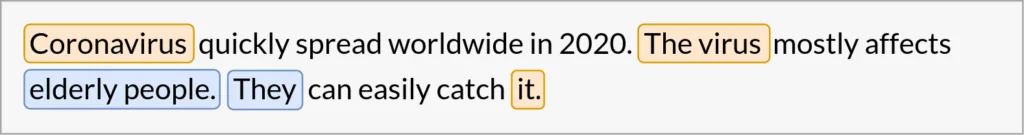

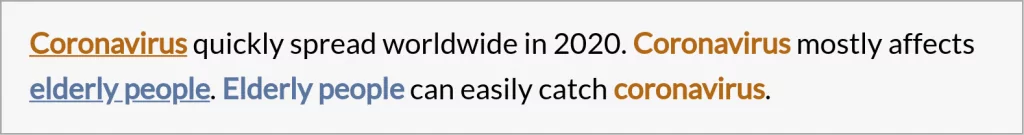

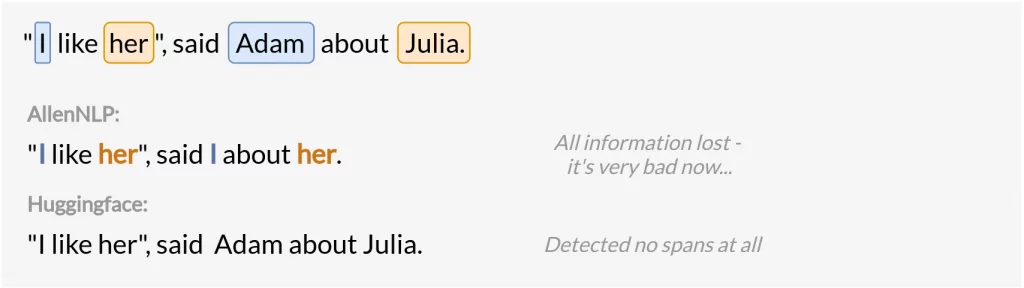

Through the use of coreference resolution, we want to achieve an unambiguous sentence, one that does not need any extra context to be understood. The expected result is shown in the following simple example (the detailed process of applying CR to a text is covered in the previous Introduction article):

Research motivation

In NLP systems, coreference resolution is usually only one part of the whole project. Like most people, we preferred to take advantage of well-tested, ready-to-use solutions that require only some fine-tuning, rather than writing everything from scratch.

There are many valuable research papers concerning coreference resolution. However, not all of them have an implementation that is straightforward and simple to adopt. Our aim was to find a production-ready open-source library that could be incorporated into our project with ease.

Top libraries

There are many open-source CR projects, but after comprehensive research on the current state-of-the-art solutions, two libraries stand out by far: Huggingface NeuralCoref and AllenNLP.

Huggingface

Huggingface has quite a few projects concentrated on NLP. They are probably best known for their transformers library, which we also use in our AI Consulting Services projects.

We won’t go into implementation details, but Huggingface’s NeuralCoref resolves coreferences using neural networks and is built on top of the excellent spaCy library, which anyone working in NLP should know by heart.

The library has an easy-to-follow README that covers basic usage. What we found to be its biggest strength, however, is that it gives simple access to the underlying spaCy structure and extends it. spaCy parses sentences into Docs, Spans, and Tokens. Huggingface’s NeuralCoref adds further attributes to them, such as whether a given Span has any coreferences at all or whether a Token belongs to any cluster.

What’s more, the library has multiple configurable parameters, for example how greedily the algorithm should act. However, after a lot of testing, we found that the default parameters work best in most cases.
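As a rough sketch of what such tuning looks like: the keyword arguments below (`greedyness`, `max_dist`, `max_dist_match`, `blacklist`) are the ones listed in the NeuralCoref README, shown with what we understand to be their default values. The helper name `build_nlp` and the dictionary are ours and purely illustrative.

```python
# Keyword arguments accepted by neuralcoref.add_to_pipe. The values are, to our
# understanding, the library defaults; treat them as an assumption and check
# the README of the version you install.
DEFAULT_COREF_KWARGS = {
    "greedyness": 0.5,      # between 0 and 1; higher means more coreference links
    "max_dist": 50,         # how many mentions back to look for an antecedent
    "max_dist_match": 500,  # look further back when a noun or proper noun matches
    "blacklist": True,      # skip pronouns such as "I", "me", "you", "your"
}


def build_nlp(model_name="en_core_web_sm", **overrides):
    """Hypothetical helper: load a spaCy model and attach NeuralCoref to it."""
    # Imported lazily so the settings above can be inspected without the libraries.
    import spacy
    import neuralcoref

    kwargs = {**DEFAULT_COREF_KWARGS, **overrides}
    nlp = spacy.load(model_name)
    neuralcoref.add_to_pipe(nlp, **kwargs)
    return nlp
```

Calling `build_nlp(greedyness=0.55)` would then give a slightly more eager pipeline, while `build_nlp()` keeps the defaults that worked best for us.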

import spacy

import neuralcoref

nlp = spacy.load('en_core_web_sm') # load the model

neuralcoref.add_to_pipe(nlp)

text = "Eva and Martha didn't want their friend Jenny to feel lonely so they invited her to the party."

doc = nlp(text) # get the spaCy Doc (composed of Tokens)

print(doc._.coref_clusters)

# Result: [Eva and Martha: [Eva and Martha, their, they], Jenny: [Jenny, her]]

print(doc._.coref_resolved)

# Result: "Eva and Martha didn't want Eva and Martha friend Jenny to feel lonely so Eva and Martha invited Jenny to the party."

There is also a demo available that marks all meaningful spans and shows the network’s output, that is, which mentions refer to which. It also reports the score assigned to each mention pair, indicating how similar the two mentions are.

The demo works nicely for short texts, but since the output is shown in a single line, if the query becomes too large it’s not easily readable.

Unfortunately, there is also a more significant problem. As of writing this, the demo works better than the implementation in code. We’ve tested many parameters and underlying models but we couldn’t achieve quite the same results as on the demo.

This is further confirmed by the community in multiple issues on their Github, with very vague and imprecise answers regarding how to obtain the same model as on the demo page – often coming down to “You have to experiment with different parameters and models, see what works best for you”.

AllenNLP

The Allen Institute for Artificial Intelligence (AI2 for short) is probably the best-known research group in the field of natural language processing. They are the inventors of models such as ELMo. Their project, AllenNLP, is an open-source library for building deep learning models for various NLP tasks.

It’s a huge library with many models built on top of PyTorch, one of them being a pre-trained coreference resolution model that we used, which is based on this paper.

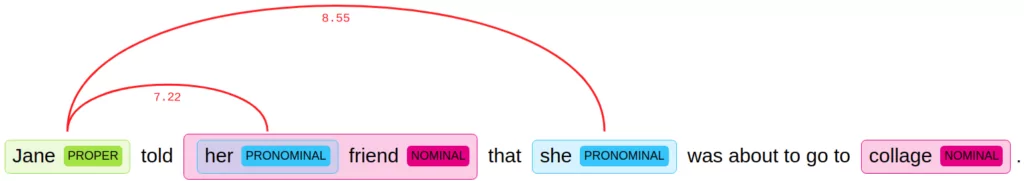

Like Huggingface NeuralCoref, AllenNLP also comes with a demo. It’s very clear and easy to understand, especially when it comes to the output, which is laid out across multiple lines and is therefore highly readable. However, unlike Huggingface, the similarity details are hidden here and aren’t easily accessible even from code.

Yet AllenNLP coreference resolution isn’t without its issues. When you first execute their Python code, the results are very confusing and it’s hard to know what to make of them.

from allennlp.predictors.predictor import Predictor

model_url = "https://storage.googleapis.com/allennlp-public-models/coref-spanbert-large-2020.02.27.tar.gz"

predictor = Predictor.from_path(model_url) # load the model

text = "Eva and Martha didn't want their friend Jenny to feel lonely so they invited her to the party."

prediction = predictor.predict(document=text) # get prediction

print(prediction['clusters']) # list of clusters (the indices of spaCy tokens)

# Result: [[[0, 2], [6, 6], [13, 13]], [[6, 8], [15, 15]]]

print(predictor.coref_resolved(text)) # resolved text

# Result: "Eva and Martha didn't want Eva and Martha's friend Jenny to feel lonely so Eva and Martha invited their friend Jenny to the party."

AllenNLP tends to find better clusters; however, it often resolves them in a way that produces gibberish sentences.
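The nested index lists become much easier to read once they are mapped back to text. Below is a minimal sketch of that mapping, assuming each pair is a `[start, end]` token range with an inclusive end and that the tokens are the ones AllenNLP returns under `prediction['document']`. The function name `clusters_to_mentions` is ours, and the token list is typed out by hand so the snippet runs without the library.

```python
def clusters_to_mentions(tokens, clusters):
    """Turn [start, end] token-index pairs (end inclusive) into mention strings."""
    return [
        [" ".join(tokens[start:end + 1]) for start, end in cluster]
        for cluster in clusters
    ]


# Assumed tokenization of the example sentence (what we would expect to find
# in prediction['document']); written by hand for illustration.
tokens = ["Eva", "and", "Martha", "did", "n't", "want", "their", "friend",
          "Jenny", "to", "feel", "lonely", "so", "they", "invited", "her",
          "to", "the", "party", "."]
clusters = [[[0, 2], [6, 6], [13, 13]], [[6, 8], [15, 15]]]

mentions = clusters_to_mentions(tokens, clusters)
print(mentions)
# [['Eva and Martha', 'their', 'they'], ['their friend Jenny', 'her']]
```

Read this way, the output shows two clusters: one for Eva and Martha, and one whose first mention is the whole phrase their friend Jenny rather than just Jenny.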

Nevertheless, as we mention later, we have applied some techniques to tackle most problems with the library and its usage.

Detailed comparison

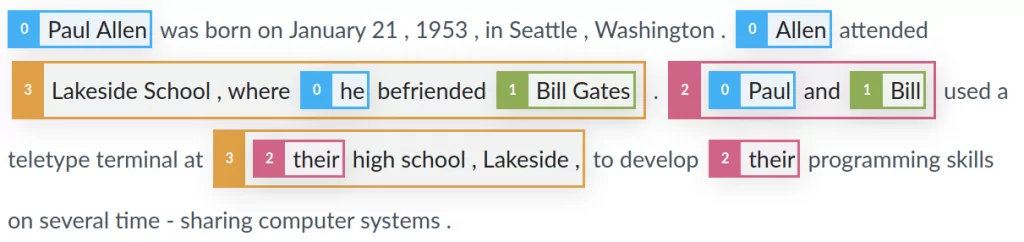

Just like libraries, there are many different datasets designed for coreference resolution. A few notable mentions are the OntoNotes and PreCo datasets. But the one that best suited our needs and was licensed for commercial use was the GAP dataset, which was developed by Google and published in 2018.

The dataset consists of almost 9000 labeled pairs of an ambiguous pronoun and an antecedent. Because the pairs were sampled from Wikipedia, they provide wide coverage of the different challenges posed by real-world texts. The dataset is available to download on GitHub.

We’ve run several tests on the whole GAP dataset, but what proved most valuable was manually going through each pair and precisely analyzing the intermediate clusters as well as the obtained results.
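For readers who want to inspect the pairs themselves, here is a small loading sketch. It assumes the tab-separated layout of the published GAP files, with the column names listed in `GAP_COLUMNS` and `TRUE`/`FALSE` labels; please verify both against the file you download. The sample row is made up for illustration and is not taken from the dataset.

```python
import csv
import io

# Column names as we understand the GAP .tsv files to use them (an assumption).
GAP_COLUMNS = ["ID", "Text", "Pronoun", "Pronoun-offset", "A", "A-offset",
               "A-coref", "B", "B-offset", "B-coref", "URL"]

# A made-up row in the GAP layout, NOT taken from the dataset.
SAMPLE_TSV = "\t".join(GAP_COLUMNS) + "\n" + "\t".join([
    "example-1", "Anna met Maria after she finished work.", "she", "21",
    "Anna", "0", "TRUE", "Maria", "9", "FALSE", "http://example.com",
]) + "\n"


def read_gap(handle):
    """Yield one dict per row with the offset and labels converted to Python types."""
    reader = csv.DictReader(handle, delimiter="\t", quoting=csv.QUOTE_NONE)
    for row in reader:
        yield {
            "text": row["Text"],
            "pronoun": (row["Pronoun"], int(row["Pronoun-offset"])),
            # Candidate name -> whether the pronoun refers to it.
            "candidates": {
                row["A"]: row["A-coref"] == "TRUE",
                row["B"]: row["B-coref"] == "TRUE",
            },
        }


examples = list(read_gap(io.StringIO(SAMPLE_TSV)))
pronoun, offset = examples[0]["pronoun"]
print(examples[0]["text"][offset:offset + len(pronoun)])  # she
```

With a real file, the `io.StringIO` wrapper would be replaced by an opened file handle; the character offsets make it easy to check whether a predicted cluster contains both the pronoun and the labeled candidate.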

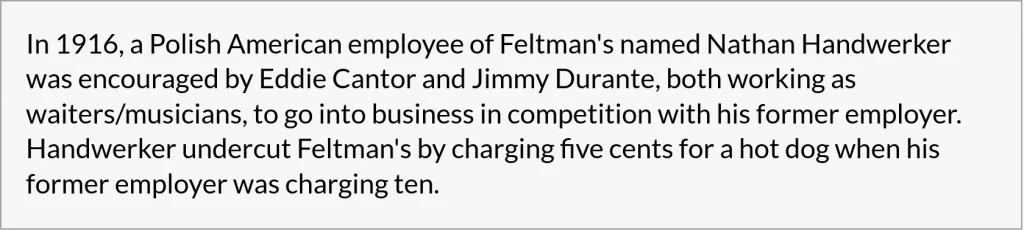

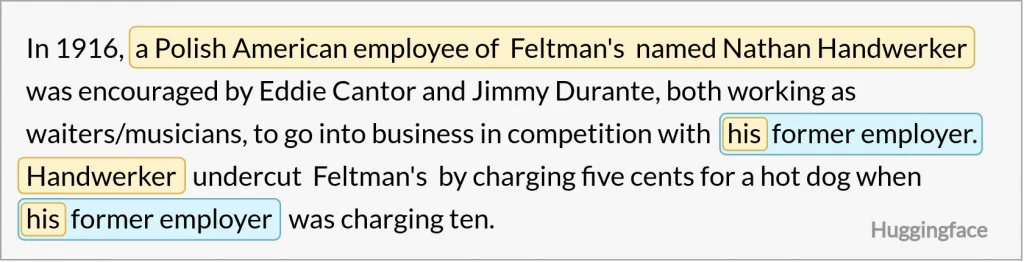

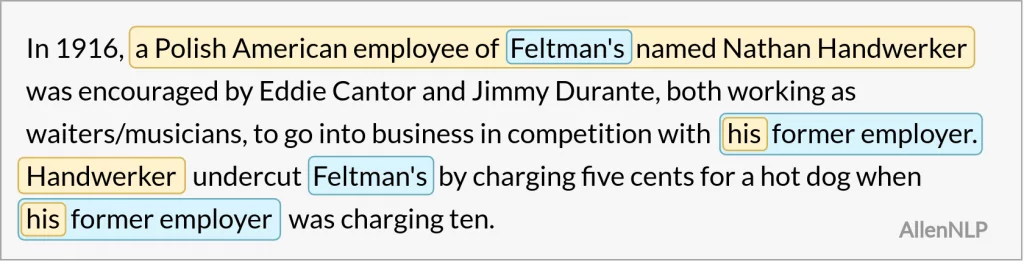

Below is one example from the dataset containing information about the history of hot dogs.

From now on, we refer to the Huggingface NeuralCoref implementation as “Huggingface” and to the implementation provided by the Allen Institute as “AllenNLP”.

Most common CR problems

Very long spans

It’s hard to tell whether obtaining long spans is an advantage or not. On one hand, long spans capture the context and tell us more about the real-world entity we’re looking for. On the other hand, they often include too much information.

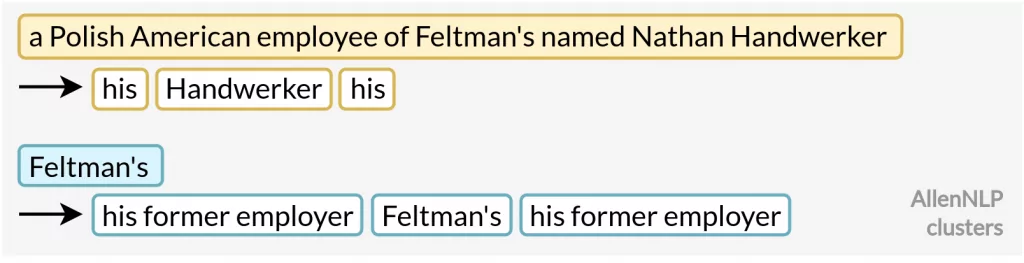

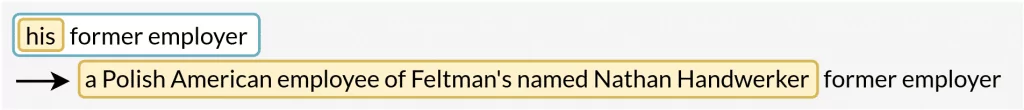

For example, the first AllenNLP cluster is represented by a very long mention: a Polish American employee of Feltman’s named Nathan Handwerker. We may not want to replace each pronoun with such an extensive expression, especially in the case of nested spans:

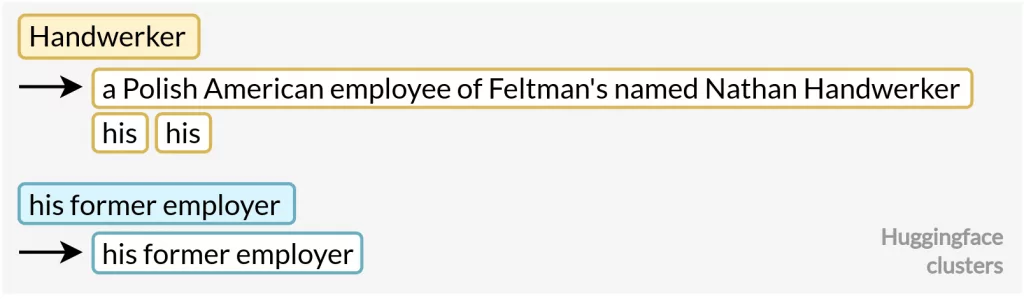

By contrast, Huggingface replaces every mention in its first cluster with just the word Handwerker. In that case, we lose the information about Handwerker’s first name, nationality, and relationship with Feltman.
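One way to make this trade-off explicit is to cap the length of the mention used for substitution. The sketch below is only an illustration of that idea, not something either library does: the function name `pick_representative`, the whitespace-based token count, and the `max_tokens` threshold are all our assumptions, and the two-mention cluster is a simplified version of the one discussed above.

```python
def pick_representative(mentions, max_tokens=4):
    """Return the first mention short enough to substitute, else the shortest one."""
    for mention in mentions:
        # Whitespace splitting is a crude stand-in for real tokenization.
        if len(mention.split()) <= max_tokens:
            return mention
    return min(mentions, key=lambda m: len(m.split()))


# Simplified, hand-written cluster based on the example discussed above.
cluster = [
    "a Polish American employee of Feltman's named Nathan Handwerker",
    "Handwerker",
]

print(pick_representative(cluster, max_tokens=4))   # Handwerker
print(pick_representative(cluster, max_tokens=10))
# a Polish American employee of Feltman's named Nathan Handwerker
```

A low threshold reproduces the terse Huggingface-style replacement, while a high one keeps the full context at the cost of very long substitutions.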

Nested coreferent mentions

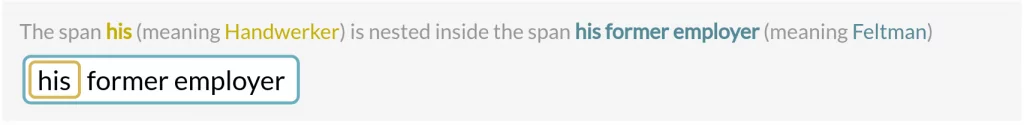

In the GAP example, we see nested spans – one (or more) mention being in the range of another:

Depending on the resolution strategy, mentions in nested spans can either be replaced or left as they are. Which is better comes down to preference, and it’s often hard to say which approach suits the data best. This can be seen in the examples below, where a different strategy seems most suitable for each one:

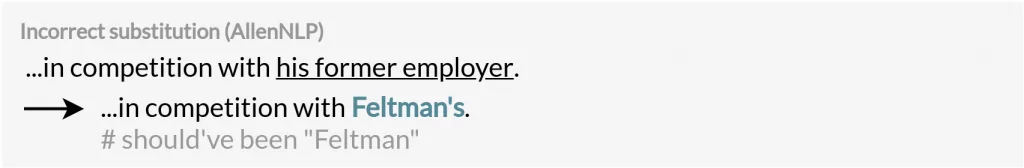

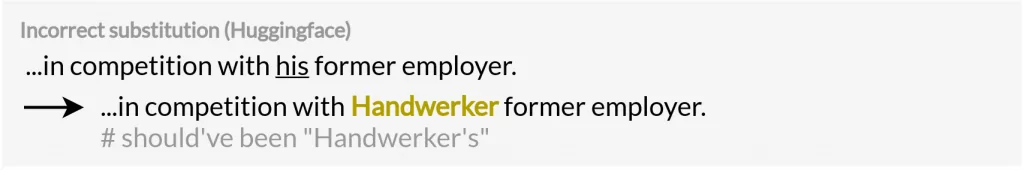
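Whichever strategy is chosen, the nested spans have to be found first. Here is a minimal sketch that does so on index-based clusters in the format AllenNLP returns (inclusive `[start, end]` token ranges); the function name `find_nested` is ours, and the input is the cluster list from the Eva and Martha example earlier in the article.

```python
def find_nested(clusters):
    """Return (inner, outer) span pairs where inner lies within outer."""
    spans = [tuple(span) for cluster in clusters for span in cluster]
    nested = []
    for inner in spans:
        for outer in spans:
            # Both ends are inclusive, so equal boundaries still count as nested.
            if inner != outer and outer[0] <= inner[0] and inner[1] <= outer[1]:
                nested.append((inner, outer))
    return nested


clusters = [[[0, 2], [6, 6], [13, 13]], [[6, 8], [15, 15]]]
print(find_nested(clusters))
# [((6, 6), (6, 8))]
```

The single hit is the token their (6, 6) sitting inside their friend Jenny (6, 8), which is exactly the kind of overlap where one has to decide whether to resolve the inner mention, the outer one, or both.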

Incorrect grammatical forms

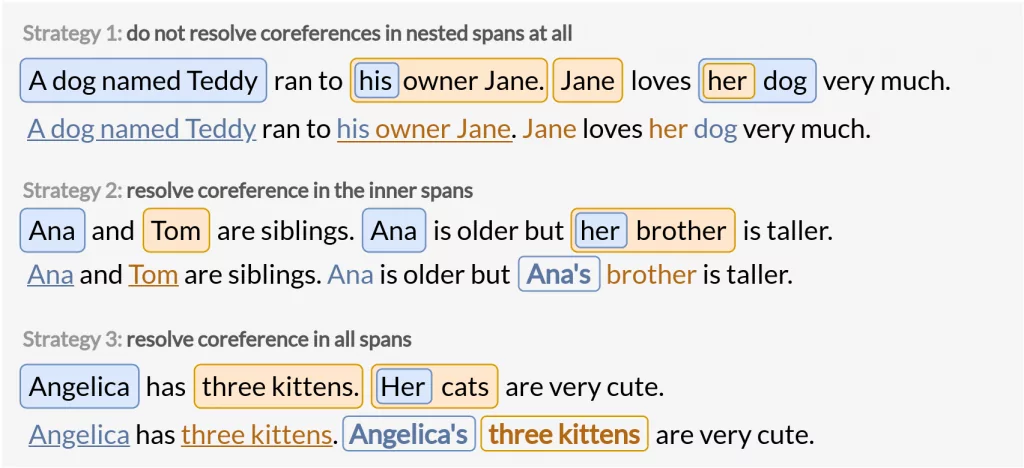

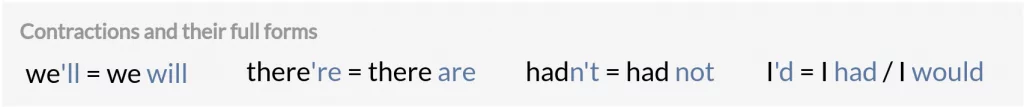

Contractions are condensed forms of expressions, usually written with an apostrophe, e.g.:

AllenNLP considers some contractions as a whole, replacing other mentions with the incorrect grammatical form:

In such cases, Huggingface avoids this problem by always taking the base form of a noun phrase. However, this might also lead to incorrect sentences:

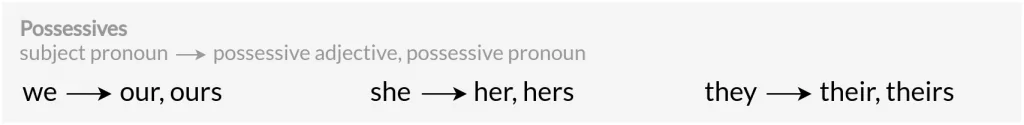

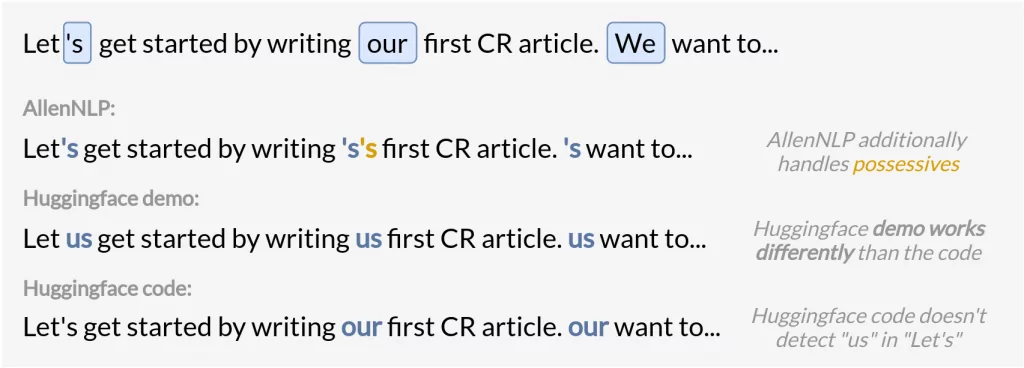

This happens when possessive adjectives and pronouns are involved, that is, when a cluster combines both subject and possessive forms.

This problem unfortunately concerns both libraries. However, AllenNLP detects a couple of POS (part-of-speech) tags and tries to handle this problem in certain cases (though not always obtaining the desired effect).
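To make the possessive case concrete, here is a deliberately simple sketch of the idea of adding `'s` when the replaced mention is possessive. It works on plain strings rather than POS tags, which is an assumption made for brevity: the word set and the function name `substitute` are ours, and "her" is left out on purpose because it can be either possessive or an object pronoun, something only a POS tagger can tell apart.

```python
# Unambiguously possessive forms only; "her" is excluded because it is also
# an object pronoun ("invited her"), which needs a POS tag to disambiguate.
POSSESSIVE_FORMS = {"my", "your", "his", "its", "our", "their"}


def substitute(mention, representative):
    """Return the text that should replace `mention` in the resolved sentence."""
    if mention.lower() in POSSESSIVE_FORMS:
        return representative + "'s"
    return representative


print(substitute("their", "Eva and Martha"))  # Eva and Martha's
print(substitute("they", "Eva and Martha"))   # Eva and Martha
```

Applied to the earlier example, this turns "Eva and Martha friend Jenny" into "Eva and Martha's friend Jenny", but it also shows why a purely lexical rule is not enough for ambiguous forms.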

Finding redundant CR clusters

A redundant cluster is, for example, the second Huggingface cluster in the discussed text fragment. Substituting his former employer with his former employer doesn’t provide any additional information. Similarly, a cluster that doesn’t contain any noun phrase, or that is composed only of pronouns, is most likely redundant. Clusters of this kind can lead to grammatically incorrect sentences, as shown in the example below.
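Such clusters can be filtered out before any substitution takes place. A minimal sketch, assuming clusters are given as lists of mention strings and using a hand-written pronoun list in place of a proper POS check; the name `is_redundant` and the word list are ours, not part of either library.

```python
# Hand-written pronoun list; a real pipeline would rely on POS tags instead.
PRONOUNS = {
    "i", "me", "my", "you", "your", "he", "him", "his", "she", "her",
    "it", "its", "we", "us", "our", "they", "them", "their",
}


def is_redundant(mentions):
    """True if resolving the cluster cannot add any information."""
    normalized = {mention.lower() for mention in mentions}
    all_identical = len(normalized) == 1     # e.g. the same phrase repeated
    only_pronouns = normalized <= PRONOUNS   # no noun phrase to substitute in
    return all_identical or only_pronouns


print(is_redundant(["his former employer", "his former employer"]))  # True
print(is_redundant(["he", "his"]))                                   # True
print(is_redundant(["Jenny", "her"]))                                # False
```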

Cataphora detection

We’ve previously comprehensively described the issue of anaphora and cataphora, the latter one being especially tricky as it is much harder to capture and often results in wrong mention substitutions.

Huggingface has problems with cataphora detection, whereas AllenNLP always treats the first span in a cluster as the representative one.
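Taking the first span as the representative is risky for cataphora, because there the pronoun comes before the entity it refers to. One possible workaround, sketched below under our own assumptions, is to pick the first mention that is not a pronoun. The function name `head_mention`, the pronoun list, and the example cluster are illustrative only.

```python
# Hand-written pronoun list; a real pipeline would rely on POS tags instead.
PRONOUNS = {
    "i", "me", "my", "you", "your", "he", "him", "his", "she", "her",
    "it", "its", "we", "us", "our", "they", "them", "their",
}


def head_mention(mentions):
    """Return the first non-pronoun mention, or None if there is none."""
    for mention in mentions:
        if mention.lower() not in PRONOUNS:
            return mention
    return None


# Made-up cataphoric cluster, e.g. from "When she arrived, Anna called her brother."
cluster = ["she", "Anna", "her"]
print(cluster[0])             # she   (first-span strategy)
print(head_mention(cluster))  # Anna  (first non-pronoun strategy)
```

Returning `None` for pronoun-only clusters also gives a natural signal to skip them altogether.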

Pros and cons

For convenience, we’ve also constructed a table of main advantages and drawbacks of both libraries, which we’ve discovered during our work with them.

| | Huggingface | AllenNLP |
| --- | --- | --- |
| PROS | demo provides valuable information; easy to use; compatible with spaCy | very legible demo; detects possessives; detects cataphora |
| CONS | demo works differently than the Python code 🙁; doesn’t handle cataphora; often finds redundant clusters | code not intuitive to use; often generates too long clusters; sometimes wrongly handles possessives; primitively resolves coreferences, often resulting in grammatically incorrect sentences |

What we’ve also found interesting is that Huggingface usually locates fewer clusters and thus substitutes mentions less often. By contrast, AllenNLP seems to replace mention-pairs more “aggressively” on account of it finding more clusters.

Summary

In this article, we’ve discussed the most distinguished coreference resolution libraries, and our experience with them. We’ve also shown their advantages and pointed out the problems they come with.

In the next and last article in this series, we are going to present exactly how we’ve managed to make them work. We’ll show how to combine them into one solution, taking what each does best and offsetting most of each library’s problems with the other one’s strengths.

If you’d like to work with any of these libraries we’ve also provided two more detailed notebooks that you can find on our NeuroSYS GitHub.

References

[1]: State-of-the-art neural coreference resolution for chatbots – Thomas Wolf (2017)

Project co-financed from European Union funds under the European Regional Development Funds as part of the Smart Growth Operational Programme.

Project implemented as part of the National Centre for Research and Development: Fast Track.