Everyone knows that humanity divides into those who do backups and those who will. It’s a story as old as time: anything you create, whether digital files or paper documents, should have a safely stored copy, just in case.

There’s another split, too: those who do back up divide further into

- those who test backups

- those who only think they have backups

The fate of the second group depends on their assumptions and the tools they chose. What seemed a good way to store data can easily prove insufficient once a security breach occurs. Protecting resources from intrusions, equipment failures, and disasters is crucial to preventing data loss.

How do you prepare data so it can be restored when something goes wrong?

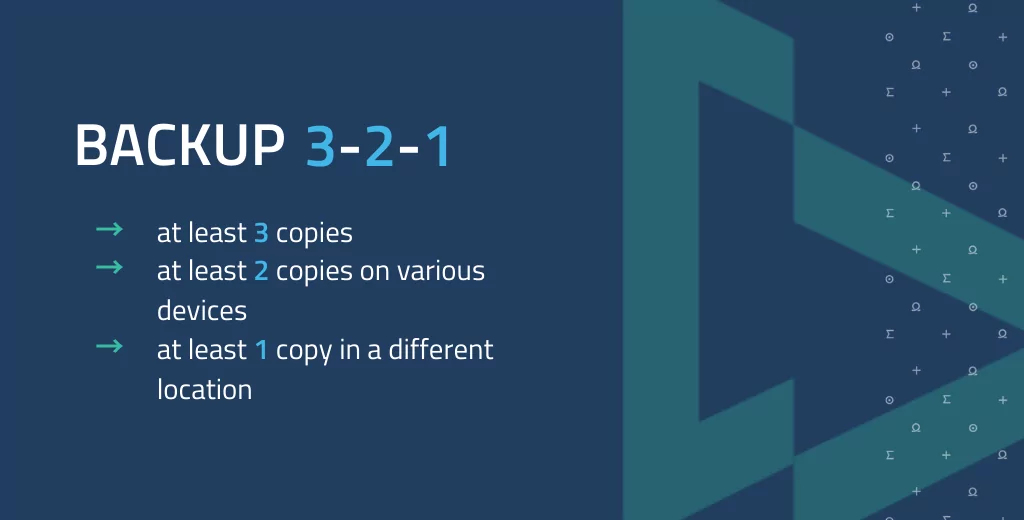

In a perfect world: the 3-2-1 backup

The 3-2-1 rule is a desirable but hard-to-achieve approach that secures data by various means: three copies of the data, on two different types of storage media, with at least one copy kept off-site.
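The rule translates easily into a checklist. Below is a minimal sketch that checks a backup plan against it; the `Copy` type and the medium names are hypothetical, and the list counts the original data as one of the three copies, which is the usual reading of the rule.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Copy:
    """One copy of the data; the plan below includes the original."""
    medium: str    # storage type, e.g. "internal disk", "external USB", "cloud"
    offsite: bool  # True if kept outside the building holding the original


def meets_3_2_1(copies: list[Copy]) -> bool:
    """Check the 3-2-1 rule: 3 copies, 2 media types, at least 1 off-site."""
    return (
        len(copies) >= 3
        and len({c.medium for c in copies}) >= 2
        and any(c.offsite for c in copies)
    )


plan = [
    Copy("internal disk", offsite=False),  # the working data
    Copy("external USB", offsite=False),   # local backup
    Copy("cloud", offsite=True),           # off-site backup
]
print(meets_3_2_1(plan))      # True
print(meets_3_2_1(plan[:2]))  # False: only two copies, none off-site
```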

The perfect setup

What storage types do we mean by various means?

- another drive in the computer

- an external USB drive

- an external drive in a different PC

- a cloud drive

- a pen drive, CD, or DVD

- any other data carrier or storage device (e.g. tape drives, used mostly by large organizations; AWS is widely believed to rely on them for its Glacier service)

Where can the backup storage be located?

- the same device (not great)

- the same building (ok, but could be better)

- another building (better)

- another country (for servers and cloud storage; good, but not ideal*)

- a portable, handheld device (great in case of an on-site accident, but risky if lost or stolen)

*Not ideal, as the major fire at OVHcloud’s data center in Strasbourg, France, showed in March 2021, taking millions of websites offline.

Storage is not the end of the story

Protecting data from malicious actions and other unfortunate events doesn’t end with keeping it out of the hands of unauthorized users.

Further steps entail:

- encrypting

- encrypting

- and just a little more, just to be sure: encrypting

(This applies to private, professional, and business-critical data; encryption isn’t necessary for backup copies of, e.g., legally purchased music, movies, or games.)

How to encrypt?

Full Disk Encryption

As the name suggests, this means encrypting entire disks. Protecting the whole drive is the easiest way to protect all the data it holds.

Windows

- BitLocker (built-in)

- VeraCrypt

macOS

- FileVault 2 (built-in)

Linux

- VeraCrypt

- dm-crypt (LUKS)

Selected files or folders

- 7-Zip: a file archiver with a high compression ratio and optional AES-256 encryption

- Cryptomator: client-side encryption, well suited to files stored in the cloud

- GnuPG: recommended for encrypting communication

Cloud data storage

Services with end-to-end encryption, where files are encrypted on your device before upload, offer the strongest protection:

- MEGA

- Filen

While we vouch for the solutions above, we still recommend additional security measures for critical data. Tools such as Restic and BorgBackup support encrypted, deduplicated backups, which keeps stored data manageable. Backup Ninja and UrBackup help ensure not only safety but also fast, easy restoration and the management of automated database backups.
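Deduplication is what keeps repeated daily backups affordable: data is split into chunks, each chunk is identified by its hash, and a chunk that is already stored is not stored again. The sketch below is a simplified illustration, not how Restic or BorgBackup are implemented: it uses fixed-size chunks, while both tools use content-defined chunking (so an insertion doesn’t shift every later chunk boundary) and also encrypt the chunks. The names `store` and `restore` and the tiny chunk size are hypothetical.

```python
import hashlib

CHUNK_SIZE = 4  # hypothetical, unrealistically small so the example stays readable


def store(data: bytes, repo: dict[str, bytes]) -> list[str]:
    """Split data into chunks, keep each unique chunk once, return the chunk IDs."""
    ids = []
    for i in range(0, len(data), CHUNK_SIZE):
        chunk = data[i:i + CHUNK_SIZE]
        chunk_id = hashlib.sha256(chunk).hexdigest()
        repo.setdefault(chunk_id, chunk)  # an already-known chunk costs no extra space
        ids.append(chunk_id)
    return ids


def restore(ids: list[str], repo: dict[str, bytes]) -> bytes:
    """Reassemble a backup from its list of chunk IDs."""
    return b"".join(repo[chunk_id] for chunk_id in ids)


repo: dict[str, bytes] = {}
monday = store(b"AAAABBBBCCCC", repo)
tuesday = store(b"AAAABBBBDDDD", repo)  # only the last chunk changed
print(len(repo))               # 4 unique chunks stored for 6 chunk references
print(restore(tuesday, repo))  # b'AAAABBBBDDDD'
```

Each backup is just a list of chunk IDs, so the second day’s backup adds only the one chunk that actually changed.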

How do we back up our code?

We store data in an AWS S3 bucket and additionally keep at least two copies of each of the two most recent backups on our own infrastructure. Both the infrastructure and the bucket resources are backed up daily. Once a month, we transfer the most recent backup of our most critical assets, including GitLab, YouTrack, Mattermost, and Bitwarden, to an external drive.
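Keeping only the most recent backups on local infrastructure comes down to a rotation rule. A minimal sketch, assuming each backup is identified by a name and a creation date (the file names and `split_rotation` are hypothetical); running the same rule on two separate hosts would cover the “at least two copies” part.

```python
from datetime import date


def split_rotation(backups: dict[str, date], keep: int = 2) -> tuple[list[str], list[str]]:
    """Return (kept, pruned) backup names, keeping only the `keep` newest."""
    newest_first = sorted(backups, key=backups.__getitem__, reverse=True)
    return newest_first[:keep], newest_first[keep:]


local = {
    "backup-2024-01-01.tar.gz": date(2024, 1, 1),
    "backup-2024-01-02.tar.gz": date(2024, 1, 2),
    "backup-2024-01-03.tar.gz": date(2024, 1, 3),
}
kept, pruned = split_rotation(local)
print(kept)    # ['backup-2024-01-03.tar.gz', 'backup-2024-01-02.tar.gz']
print(pruned)  # ['backup-2024-01-01.tar.gz']
```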

Amazon S3 provides an Object Lock mechanism, which protects stored backups from being deleted or overwritten.

The lock has two retention modes:

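- Governance mode: users cannot overwrite or delete locked object versions or change their lock settings unless they have been granted special permissions (such as s3:BypassGovernanceRetention)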

- Compliance mode: no user, not even the root user of the AWS account, can overwrite or delete a protected object version or shorten its retention period until that period ends

Object Lock relies on retention periods, during which the selected objects stay locked under WORM protection. WORM stands for Write Once, Read Many, a storage model used where stored data must not be changed.
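To make the difference between the two modes concrete, here is a toy model of the rule S3 applies when someone tries to permanently delete a locked object version. It is not the AWS API: `LockedVersion` and `can_delete` are hypothetical names, and the single `may_bypass_governance` flag stands in for what AWS actually requires in governance mode (the s3:BypassGovernanceRetention permission plus explicitly requesting the bypass).

```python
from dataclasses import dataclass
from datetime import datetime, timedelta, timezone


@dataclass
class LockedVersion:
    """Simplified model of one object version under Object Lock (not the AWS API)."""
    mode: str               # "GOVERNANCE" or "COMPLIANCE"
    retain_until: datetime  # end of the retention period

    def can_delete(self, now: datetime, may_bypass_governance: bool = False) -> bool:
        if now >= self.retain_until:
            return True                   # retention over: WORM protection no longer applies
        if self.mode == "GOVERNANCE":
            return may_bypass_governance  # only users with the special permission
        return False                      # COMPLIANCE: nobody, not even the root user


now = datetime(2024, 1, 1, tzinfo=timezone.utc)
locked = LockedVersion("COMPLIANCE", now + timedelta(days=30))
print(locked.can_delete(now, may_bypass_governance=True))  # False
print(locked.can_delete(now + timedelta(days=31)))         # True
```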

Another useful AWS mechanism is S3 Versioning, which keeps multiple versions of a backed-up object in the same bucket. Each attempt to overwrite a backup creates and stores a new version instead of replacing the existing one.
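The effect of versioning can be shown with a tiny in-memory model: an overwrite appends a new version rather than replacing the old one, so an earlier, intact backup stays retrievable. `VersionedBucket` is a hypothetical class for illustration, not boto3; real S3 version IDs are opaque strings, not list indices.

```python
class VersionedBucket:
    """Toy in-memory model of a versioned bucket (hypothetical, not boto3)."""

    def __init__(self) -> None:
        self._versions: dict[str, list[bytes]] = {}

    def put(self, key: str, body: bytes) -> int:
        """Store a new version of `key` and return its version number."""
        versions = self._versions.setdefault(key, [])
        versions.append(body)  # overwriting appends, never replaces
        return len(versions) - 1

    def get(self, key: str, version: int = -1) -> bytes:
        """Return the requested version of `key` (the latest by default)."""
        return self._versions[key][version]


bucket = VersionedBucket()
bucket.put("gitlab.tar.gz", b"good backup")
bucket.put("gitlab.tar.gz", b"corrupted overwrite")
print(bucket.get("gitlab.tar.gz"))     # b'corrupted overwrite'
print(bucket.get("gitlab.tar.gz", 0))  # b'good backup'
```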

AWS S3 storage classes

Amazon S3 Standard-Infrequent Access

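Designed for data that is accessed less often but must be available immediately when requested. Storage costs less than in S3 Standard, but retrievals are charged per GB, and each object is billed for at least 30 days, even if it is deleted sooner.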

Amazon S3 Glacier Deep Archive

The lowest-cost storage class, with one inconvenience: archived objects must be restored before they can be read, which takes up to 12 hours with a standard retrieval (up to 48 hours with a bulk retrieval). Objects are billed for at least 180 days.
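Minimum storage durations make the cost arithmetic slightly counterintuitive: deleting an object early does not end the charge. A minimal sketch, assuming the 30-day (Standard-IA) and 180-day (Deep Archive) minimums documented by AWS; `billable_days` is a hypothetical helper, and current pricing pages should be checked before relying on these values.

```python
# Minimum billable storage durations per class, as documented by AWS (assumed current)
MIN_BILLABLE_DAYS = {"STANDARD_IA": 30, "DEEP_ARCHIVE": 180}


def billable_days(storage_class: str, days_stored: int) -> int:
    """Objects deleted early are still charged for the class's minimum duration."""
    return max(days_stored, MIN_BILLABLE_DAYS[storage_class])


print(billable_days("STANDARD_IA", 10))   # 30
print(billable_days("DEEP_ARCHIVE", 90))  # 180
print(billable_days("DEEP_ARCHIVE", 200)) # 200
```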

AWS backups are automated with S3 Lifecycle rules

Once a day, we upload a backup of every tool we use. These backups are immediately accessible for 30 days; after that, they are marked as expired and queued for deletion.

Every week, we upload a combined backup to S3 Glacier Deep Archive. After 180 days, these copies are marked as expired and deleted.

Each backup is tagged (Daily or Weekly) for easier management and is protected by Object Lock against deletion and overwriting.
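The daily and weekly expiration described above maps naturally onto S3 Lifecycle rules filtered by tag. The sketch below only builds the configuration dictionary in the shape that boto3’s `put_bucket_lifecycle_configuration` accepts; the tag key `backup`, the rule IDs, and the bucket name are hypothetical, since the actual rule setup isn’t given here.

```python
def lifecycle_config(tag_key: str = "backup") -> dict:
    """Build an S3 LifecycleConfiguration that expires backups by tag.

    `tag_key` is a hypothetical name; the tag values mirror the Daily/Weekly tags.
    """
    def expire_after(tag_value: str, days: int) -> dict:
        return {
            "ID": f"expire-{tag_value.lower()}",
            "Filter": {"Tag": {"Key": tag_key, "Value": tag_value}},
            "Status": "Enabled",
            "Expiration": {"Days": days},
        }

    return {"Rules": [expire_after("Daily", 30), expire_after("Weekly", 180)]}


config = lifecycle_config()
print([rule["ID"] for rule in config["Rules"]])  # ['expire-daily', 'expire-weekly']

# With boto3 installed and AWS credentials configured, it could be applied with:
# import boto3
# boto3.client("s3").put_bucket_lifecycle_configuration(
#     Bucket="example-backup-bucket",  # hypothetical bucket name
#     LifecycleConfiguration=config,
# )
```

In a versioned bucket, an Expiration rule only adds a delete marker to the current version; removing older versions needs a separate NoncurrentVersionExpiration rule, and Object Lock still prevents locked versions from being permanently deleted before their retention period ends.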

Backup tests

Every two to three months, we test our stored backups. Using a separate server and Docker, we verify that the backups are valid and that data can actually be restored from them when needed.

This step should give the final answer to the “do we really have backups” question.
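Part of a restore test can be automated: record a checksum for every file when the backup is made, extract the archive somewhere disposable, and compare. The sketch below illustrates that idea with only Python’s standard library and a local tar.gz archive; it is not the Docker-based procedure described above, and `manifest` and `backup_restores_cleanly` are hypothetical names. A full test should also start the restored services, which a checksum comparison cannot replace.

```python
import hashlib
import tarfile
import tempfile
from pathlib import Path


def manifest(root: Path) -> dict[str, str]:
    """Map each file's path (relative to root) to its SHA-256 digest."""
    return {
        str(p.relative_to(root)): hashlib.sha256(p.read_bytes()).hexdigest()
        for p in sorted(root.rglob("*"))
        if p.is_file()
    }


def backup_restores_cleanly(archive: Path, expected: dict[str, str]) -> bool:
    """Extract the archive into a scratch directory and compare file digests."""
    # Use the safe "data" extraction filter where this Python version supports it
    extract_kwargs = {"filter": "data"} if hasattr(tarfile, "data_filter") else {}
    with tempfile.TemporaryDirectory() as scratch:
        with tarfile.open(archive, "r:gz") as tar:
            tar.extractall(scratch, **extract_kwargs)
        return manifest(Path(scratch)) == expected


# Usage: back up a small directory, then check that it restores identically.
with tempfile.TemporaryDirectory() as work:
    src = Path(work, "data")
    src.mkdir()
    (src / "notes.txt").write_text("hello")
    archive = Path(work, "backup.tar.gz")
    with tarfile.open(archive, "w:gz") as tar:
        tar.add(src, arcname=".")
    ok = backup_restores_cleanly(archive, manifest(src))
print(ok)  # True
```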

Coming back to the initial question: to back up or not to back up?

There’s no other good answer: yes, you should back up. Securing data critical to your operations is necessary to maintain continuity and avoid downtime. Better safe than sorry, however clichéd it sounds.

Recovering from unplanned events is critical to keeping operations running and shouldn’t be neglected in favor of seemingly easier, ill-considered shortcuts. Backups may not be the most exciting part of software development, but their importance can hardly be overstated.