In the previous article on Elasticsearch, we’ve laid out essential facts about the engine’s mechanism and its components. This time, we would like to share some ideas you may come across or want to experiment with to boost your search performance. For the purposes of this article, we’ve conducted some experiments to illustrate the concepts and provide you with a ready-to-use code. Furthermore, based on our previous research experiences in the NLP domain, we will also try to explain what we found most beneficial when optimizing a search engine.

Experiments setup

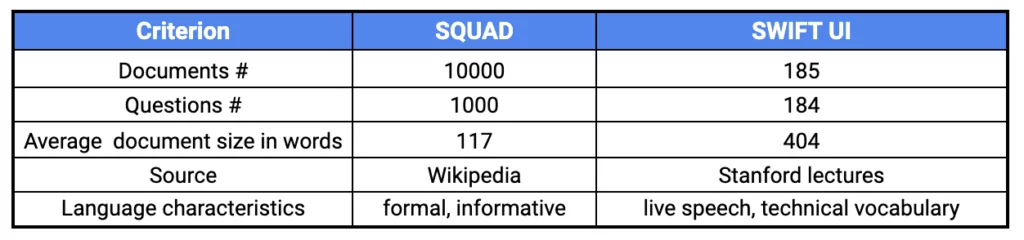

To demonstrate the optimization ideas better, we have prepared two Information Retrieval datasets.

- The first one is based on SQUAD 2.0 – a Question Answering benchmark that can be used for validation of the Information Retrieval (IR) as well, when adjusted properly. We’ve extracted 10.000 random documents and 1.000 of related questions. We treat each SQUAD paragraph as a separate index document.

It is worth mentioning that Elasticsearch is designed by default for a much larger collection (millions of documents). However, we found the limited SQUAD version faster to compute and well-generalizing.

- The second benchmark is our custom-prepared and much smaller. We used it to show how Elasticsearch behaves on smaller indices and how the same query types act differently on other datasets. The dataset is based on Stanford University lectures transcription of the SWIFT UI course, which can be found here. We’ve split the first seven lectures into 185 smaller ones, 25-sentenced documents with five sentences overlapping. We have also prepared 184 questions with multiple possible answers.

SQUAD paragraphs come from Wikipedia, so the text is concise and well written, and is not likely to contain errors. Meanwhile, the SWIFT UI benchmark consists of texts from recorded speech samples – it is more vivid, less concrete, but still grammatically correct. Moreover, it is rich in technical, software engineering-oriented vocabulary.

For validation of the Information Retrieval task, usually the MRR (mean reciprocal rank) or MAP (mean average precision) are used. We also use them on a daily basis; however, for the purpose of this article, to simplify the interpretation of outcomes, we have chosen the ones which are much more straightforward – the ratio of answered questions within top N hits: hits@10, hits@5, hits@3, hits@1. For implementation details see our NeuroSYS GitHub repository, where you can find other articles, and our MAGDA library.

Idea 1: Check the impact of analyzers on IR performance

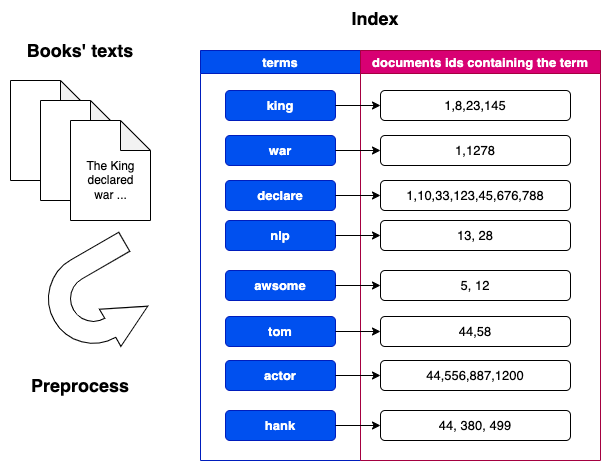

As described in the previous article, we can use a multitude of different analyzers to perform standard NLP preprocessing operations on indexing texts. As you can probably recall, analyzers are by and large a combination of tokenizers and filters and are used for storing terms in the index in an optimally searchable form. Hence, experimenting with filters and tokenizers should probably be the first step you should take towards optimizing your engine’s performance.

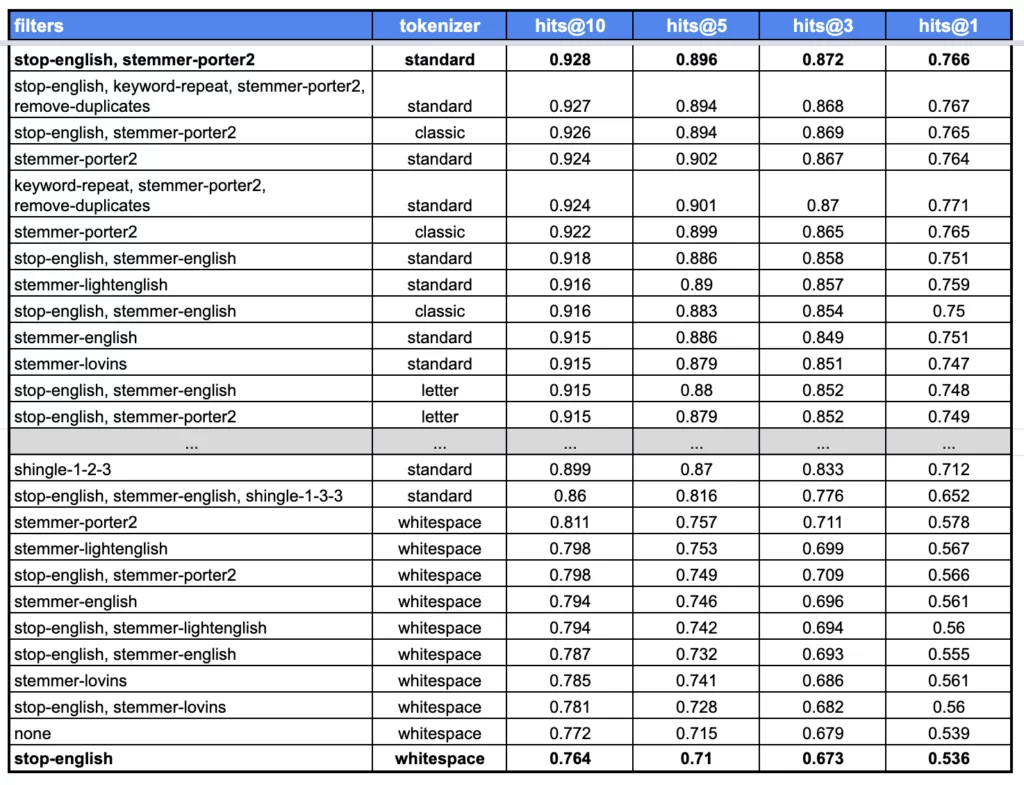

To confirm the above statement, we present validation results of applying different analyzers to the limited SQUAD documents. Depending on the operations performed, the effectiveness of the search varies significantly.

We provide the results of experiments carried out using around 50 analyzers on the limited SQUAD sorted by hits@10. The table is collapsed for readability purposes; however, feel free to take a look at the full results and code on our GitHub.

Based on our observations of multiple datasets, we present the following conclusions about analyzers, which, we hope, will be helpful during your optimization process. Please bear in mind that these tips may not apply to all language domains, but we still highly recommend trying them out by yourselves on your datasets. Here is what we came up with:

- stemming provides significant improvements,

- stopwords removal doesn’t improve performance too much,

- the standard tokenizer usually performs best, while the whitespace tokenizer does the worst,

- usage of shingles (word n-grams) does not provide much improvement, while char n-grams can even decrease the performance,

- simultaneous stemming while keeping the original words in the same field performs worse than bare stemming,

- Porter stemming outperforms Lovins algorithm.

It is also worth noting that the default standard analyzer, which consists of a standard tokenizer, lowercase, and stop-words filters, usually works quite well as it is. Nevertheless, we were frequently able to outperform it on multiple datasets by experimenting with other operations.

Idea 2: Dig deeper into the scoring mechanism

As we know, Elasticsearch uses Lucene indices for sharding, which works in favor of time efficiency, but can also give you a headache if you are not aware of it. One of the surprises is that Elasticsearch carries out score calculation separately for each shard. It might affect the search performance, if too many shards are used. In consequence, the results can turn out to be non-deterministic between indexations.

Inverse Document Frequency is an integral part of BM25 and is calculated for each term, while putting documents into separate buckets. Therefore, the search score may differ more for particular terms, the more shards we have.

Nevertheless, it is possible to force Elasticsearch to calculate the BM25 score for all shards together, treating them as if they were a single, big index. However, it affects the search time greatly. If you don’t care about the search time but about the consistency/reproducibility, consider using Distributed Frequency Search. It will sum up all BM25 factors, regardless of the number of shards.

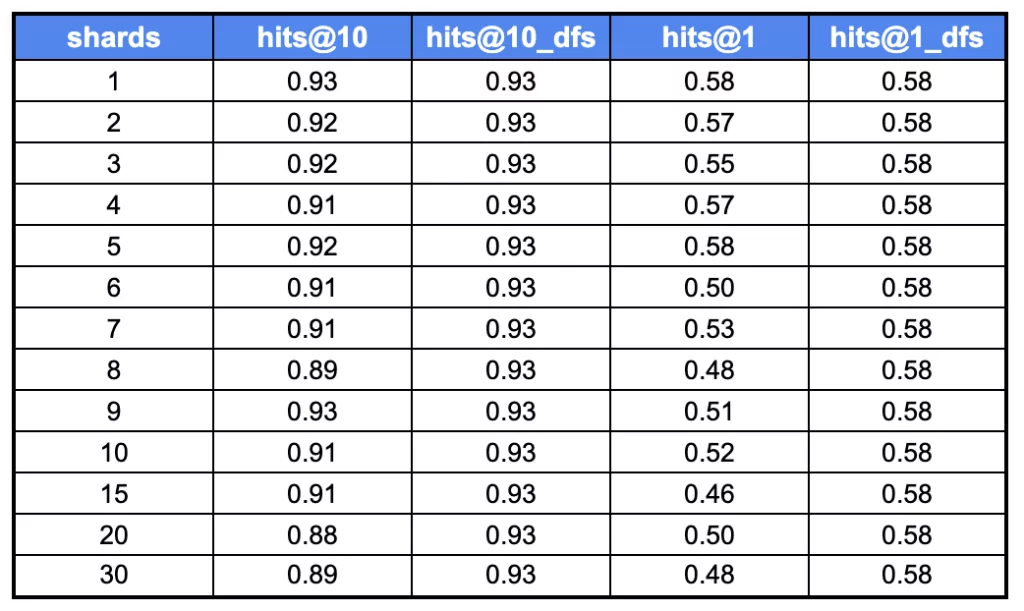

We have presented the accuracy of the Information Retrieval task in the below table. Note: It was our intention to focus on accuracy of the results and not on how fast we’ve managed to acquire them.

It can be clearly seen that the accuracy fluctuates when changing the number of shards. It can also be noted that the number of shards does not affect the scores when using DFS.

However, with a dataset large enough, the impact of shards will be less. The more documents in an index, the more IDF parts of BM25 become normalized throughout shards.

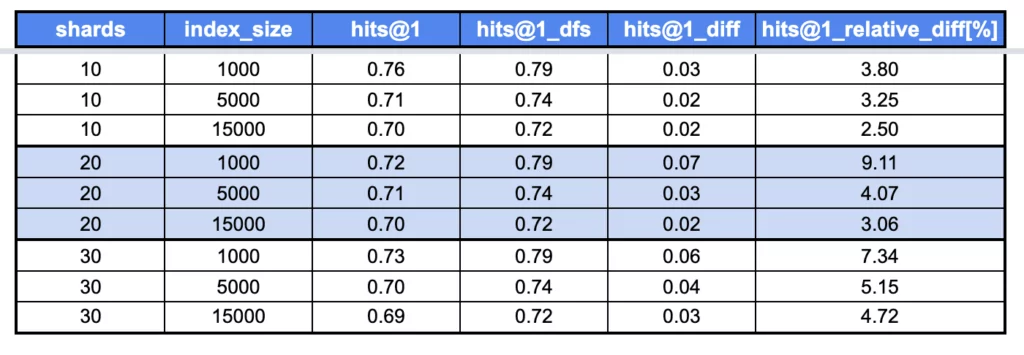

In the table above, you can observe that the impact of the shards (a relative difference between DFS and non-DFS scores) is lower the more documents are indexed. Hence, the problem is less painful when working with more extensive collections of texts. However, in such a case, it is more probable that we would require more shards due to time performance. When it comes to smaller indices, we recommend setting the shards’ number to the default value of one and not worrying too much about the shards effect too much.

Idea 3: Check the impact of different scoring functions

BM25 is a well-established scoring algorithm that performs great in many cases. However, if you would like to try out other algorithms and see how well they do in your language domain, Elasticsearch allows you to choose from a couple of implemented functions or to define your own if needed.

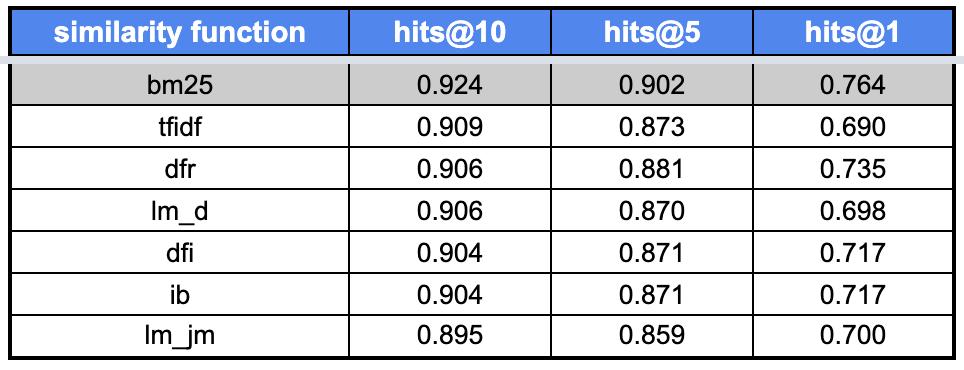

Even though we do not recommend starting optimization by changing the scoring algorithm, the possibility remains open. We would like to present results on SQUAD 10k with the use of the following functions:

- Okapi BM25 (default),

- DFR (divergence from randomness),

- DFI (divergence from independence),

- IB (information-based),

- LM Dirichlet,

- LM Jelinek Mercer,

- a custom TFIDF implemented as a scripted similarity function.

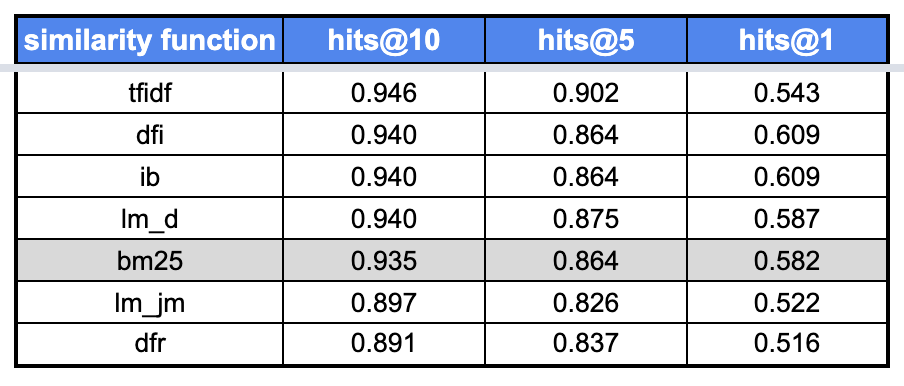

As you can see in the case of the limited SQUAD, the BM25 turned out to be the best-performing scoring function. However, when it comes to SWIFT UI, slightly better results can be obtained using the alternative similarity scores, depending on the metric we care about.

Idea 4: Tune Okapi BM25 parameters

Staying on the scoring topic, there are a couple of parameters the values of which can be changed within the BM25 algorithm. However, as in the case of choosing other scoring functions, we again do not recommend changing the parameters as the first steps of optimization.

The default values for parameters are:

- b – term frequency normalization coefficient based on the document length,

- k1 – term frequency non-linear normalization coefficient

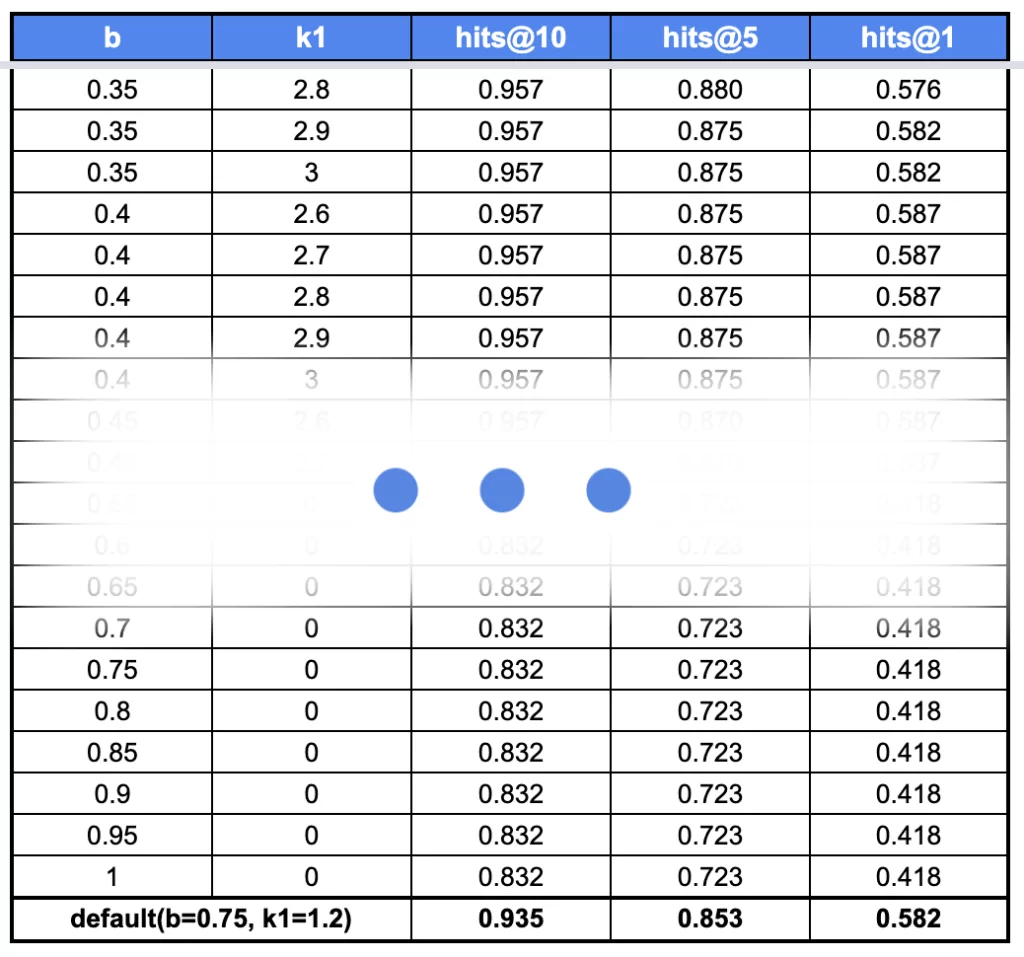

They usually perform best across multiple benchmarks, which we’ve confirmed as well in our tests on SQUAD.

Keep in mind that despite the defaults being considered most universal, it doesn’t mean you should ignore other options. For example, in the case of the SWIFT UI dataset, other values performed better by 2% on the top 10 hits.

In this case, the default parameters turned out to be again the best for SQUAD, while SWIFT UI would benefit more from other ones.

Idea 5: Add extra data to your index with custom filters

As already mentioned, there are plenty of options in NLP, which text can be enriched with. We would like to show you what happens when we decide to add synonyms or other word derivatives like phonemes.

For the implementation details, we once again encourage you to have a glimpse at our repository.

Synonyms

Wondering how to make our documents more verbose or easier to query, we may try to extend the available wording used for document descriptions. However, this must be done with great care. Blindly adding more words to documents may lead to loss of their meaning, especially when it comes to the longer texts.

Automatic – WordNet synonyms

It is possible to automatically extend our inverted index with additional words, using synonyms from the WordNet synsets. Elasticsearch has a built-in synonyms filter that allows for an easy integration.

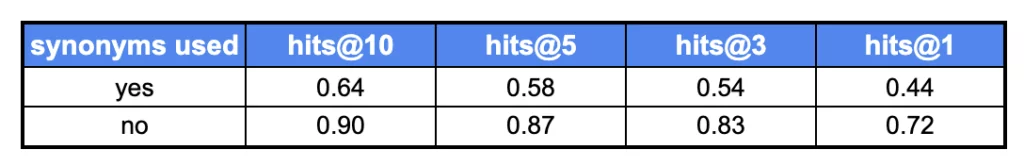

Below, we’ve presented search results on both SQUAD and SWIFT UI datasets with and without the use of all available synonyms.

As can be seen, using automatic, blindly added synonyms reduced the performance drastically. With thousands of additional words, documents’ representations get overpopulated; thus they lose their original meaning. Those redundant synonyms may not only fail to improve documents’ descriptiveness, but may also harm already meaningful texts.

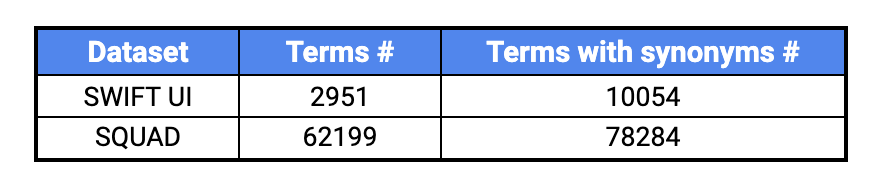

The number of terms in the SWIFT UI dataset has more than tripled when synonyms were used. It brings very negative consequences for the BM25 algorithm. Remember that the algorithm penalizes lengthy texts, hence documents that were previously short and descriptive may now be significantly lower on your search results page.

Meaningful synonyms

Of course, using synonyms may not always be a poor idea, but it might require some actual manual work.

- Firstly, using spaCy, we’ve extracted 50 different Named Entities from the Swift programming language domain used in the SWIFT UI dataset.

- Secondly, we’ve found synonyms for them, manually. As our simulation does not require usage of actual, existing words we have simply used random ones as business entities’ substitutes.

- Finally, we have replaced occurrences of the Named Entities in questions with the selected word equivalents from the previous step, and added a list of the synonyms to the index with the synonym_analyzer.

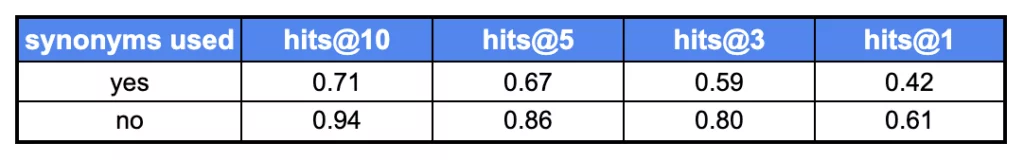

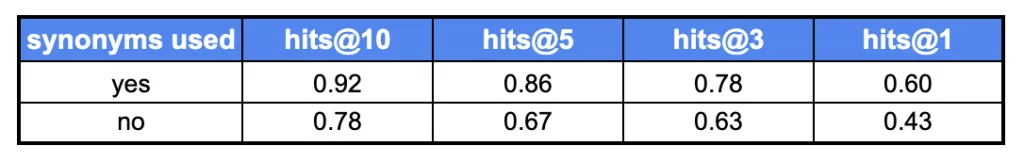

Our intention was to create a simulation with certain business entities to which one can refer in search queries in many different ways. Below you can see the results.

Search performance improves with the use of manually added synonyms. Even though the experiment was carried out on a not too large sample, we hope that it illustrates the concept well – you can benefit from adding some meaningful words’ equivalents if you have proper domain knowledge. The process is time-consuming, and can hardly be automated; however, we believe it to be often worth the invested time and effort.

Impact of phonemes

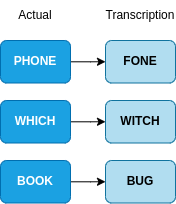

It should be noted that, when working with ASR (automatic speech recognition) transcriptions, many words can be recognized incorrectly. They are often subject to numerous errors in transcription since some phrases and words sound alike. It might also happen that non-native speakers may mispronounce the words. For example:

To use a phonetic tokenizer a special plugin must be installed in the Elasticsearch node.

The sentence “Tom Hanks is a good actor as he loves playing” is represented as:

- [‘TM’, ‘HNKS’, ‘IS’, ‘A’, ‘KT’, ‘AKTR’, ‘AS’, ‘H’, ‘LFS’, ‘PLYN’], when using Metaphone phonetic tokenizer,

and

- [‘TM’, ‘tom’, ‘HNKS’, ‘hanks’, ‘IS’, ‘is’, ‘A’, ‘a’, ‘KT’, ‘good’, ‘AKTR’, ‘actor’, ‘AS’, ‘as’, ‘H’, ‘he’, ‘LFS’, ‘loves’, ‘PLYN’, ‘playing’], when using both phonemes and original words simultaneously.

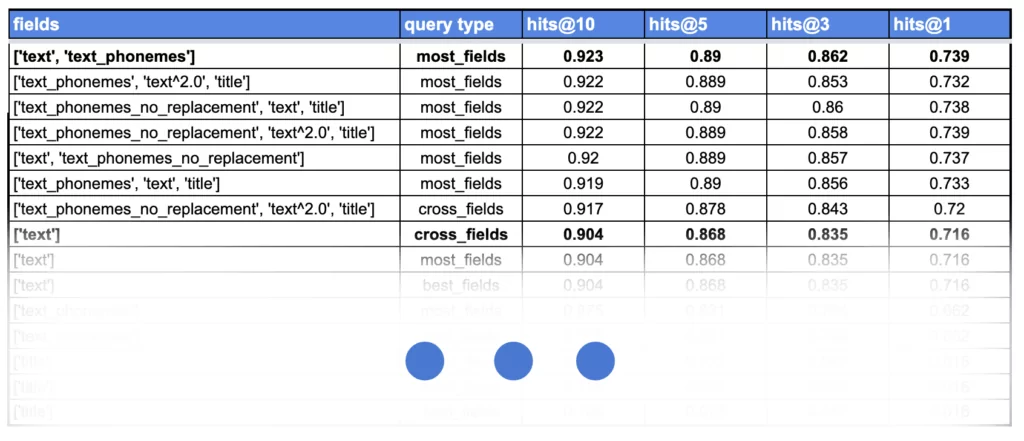

We’ve come to the conclusion that using phonemes instead of the original text in the case of high-quality, non-ASR datasets like SQUAD does not yield much of an improvement. However, indexing phonemes and the original text in separate fields, and searching by both of them, slightly increased the performance. In the case of SWIFT UI the quality of transcriptions is surprisingly good, although the text comes from ASR. Therefore, the phonetic tokenizer is not applicable here as well.

Note: It might be a good idea to use phonetic tokenizers when working with more corrupted transcriptions, when the text is prone to typos and errors.

Idea 6: Add extra fields to your index

You might come up with the idea of putting additional fields to the index and expect them to boost the search performance. In Data Science it’s called feature engineering, or an ability to derive and create more valuable and informative features from available attributes. So, why not try deriving new features from text and index them in parallel as separate fields?

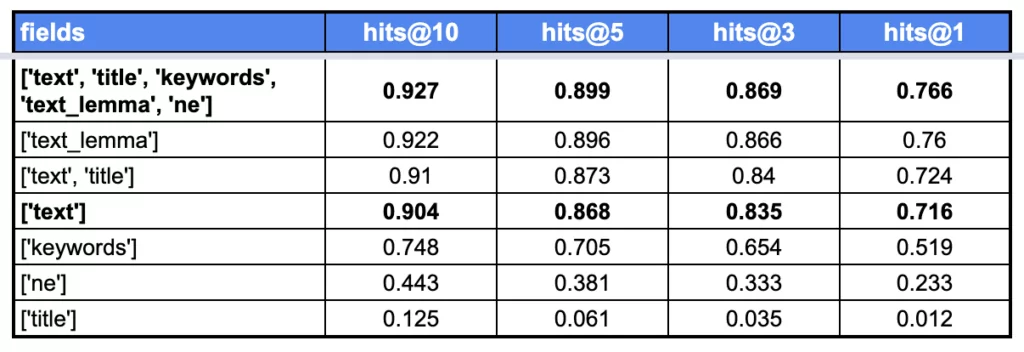

In this little experiment, we wanted to prove whether the above idea makes sense in Elasticsearch, and how to achieve it. We’ve tested it by:

- extracting Named Entities using Transformer-based deep learning models from Huggingface 🤗,

- getting keywords by using the KeyBERT model,

- adding lemmas from SpaCy

Note: The named entities, as well as keywords, are the excerpts already existing in the text but were extracted to separate fields. In contrast, lemmas are additionally processed words; they provide more information than available in the original text.

While we were conducting the experiments, we discovered that, in this case, keywords and NERs did not improve the IR performance. On the contrary, word lemmatization seemed to provide a significant boost.

As a side note, we have not compared the lemmatization with stemming in this experiment. It’s worth mentioning that lemmatization is usually much trickier and can perform slightly worse in relation to stemming. For English, stemming is usually enough; however, in the case of other languages cutting off the suffixes will not suffice.

Based on our experience, we can also say that indexing parts of the original text without modifications, and putting them into separate fields, doesn’t provide much improvement. In fact, BM25 does just fine with keywords or Named Entities left in the original text, and thanks to the algorithm’s formula, it knows which words are more important than others, so there is no need to index them separately.

In short, it seems that fields providing some extra information (such as text title) or containing additionally processed, meaningful phrases (like word lemmas) can improve search accuracy.

Idea 7: Optimize the query

Last but not least, there are numerous options for creating queries. Not only can we change the query type but also we can boost individual fields in an index. Next to analyzer usage, we highly recommend experimenting with this step, as it usually improves the results.

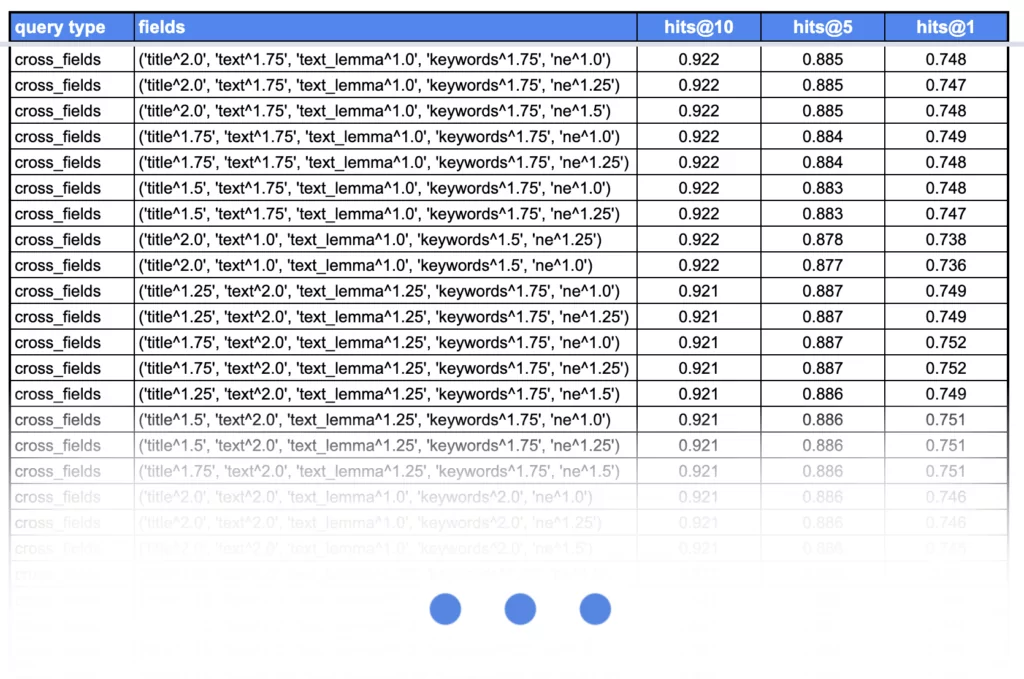

We have conducted a small experiment, in which we have tested the following types of Elastic multi-match queries: best_fields, most_fields, cross_fields, on fields:

- text – original text,

- title – the title of the document, only if provided,

- keywords – taken from KeyBERT,

- NERs – done via Huggingface 🤗 Transformers,

- lemmas – extracted by SpaCy,

Alongside, we have boosted each field from the default value of 1.0 to 2.0 with increments of 0.25.

As it has been proven above, the results on SQUAD dataset, despite being limited, show that queries of cross_field type provided the best results. What should also be noted is that boosting the title field was a good choice, as in most cases, it already contained important and descriptive data about the whole document. We’ve also observed that boosting only the keywords or NER fields gives the worst results.

However, as often happens, there is nothing like one clear and universal choice. When experimenting with SWIFT UI, we’ve figured that the title field is less important in this case, as it is often missing or contains gibberish. Also, when it comes to the query type, while cross_fields usually appears at the top, there are plenty of best_fields queries with very similar performance. In both cases, most_fields queries are usually placed somewhere in the middle.

Keep in mind that it all will most likely come down to analysis per dataset, as each of them is different, and other rules may apply. Feel free to use our code, plug in your dataset and find out what works best for you.

Conclusion

Compared to deep learning Information Retrieval models, full-text search still performs pretty well in plenty of use cases. Elasticsearch is a great and popular tool, so you might be tempted to start using it right away. However, we encourage you to at least read up a bit upfront and then try to optimize your search performance. This way you will avoid falling into a wrong-usage-hole and the attempts to get out of it.

We highly recommend beginning with analyzers and query optimization. By utilizing ready-to-use NLP mechanisms in Elastic, you can significantly improve your search results. Only then, proceed further with more sophisticated or experimental ideas like scoring functions, synonyms or additional fields.

Remember, it is crucial to apply methods appropriate to the nature of your data and to use a reliable validation procedure, adapted to the given problem. In this subject, there is no “one size fits all” solution.