Recent advances in Artificial Intelligence (AI) have brought us to a point where AI-based technologies surround us and assist us in many daily routines. Some people may not realize it, but AI is already an inherent part of our lives. Just think of how much time you spend on your smartphone, which is loaded with AI algorithms. Whenever you take a photo, browse your gallery, or enjoy augmented reality features, AI assists you. Maybe you just scroll through Facebook or Instagram, or look for a new TV show on Netflix or music on Spotify: it is all powered by AI-based recommender systems. And it is not only about leisure activities. AI is already an integral part of automation and robotics, surveillance, e-commerce, and agriculture 4.0, and it is also finding its way into many other sectors, such as healthcare, human resources, and banking. AI has beaten top human players at chess, Go, and video games. It has even started to replace human work in some areas. We, as individuals and as a society, have become dependent on AI. With the recent progress in deploying machine learning (ML) models on edge devices, we can only expect more and more AI systems around us. Are we ready for this? Are we reaching the technological singularity?

General artificial intelligence

Stephen Hawking once said: “A superintelligent AI will be extremely good at accomplishing its goals, and if those goals aren’t aligned with ours, we’re in trouble”. Fortunately, we have not yet reached even the level of general artificial intelligence. While some people believe it is not going to happen soon (or ever), many science and technology leaders are not so sure. DeepMind researchers recently published “Reward is enough” [1]. They hypothesize that reinforcement learning agents could develop general AI by interacting with rich environments guided only by a simple reward signal. In other words, an agent driven by a single goal, maximizing its cumulative reward, could learn the whole range of abilities associated with intelligence, including knowledge, learning, perception, social intelligence, language, and generalisation.
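To make “cumulative reward” concrete: in the standard reinforcement learning formulation, an agent tries to maximize the (often discounted) sum of the rewards it collects, called the return. The sketch below computes the return of a single episode. The discount factor `gamma` is an assumption of this common textbook formulation, not a detail taken from [1]; with `gamma=1.0` it reduces to the plain cumulative reward.

```python
def discounted_return(rewards, gamma=0.99):
    """Return G_0 = r_0 + gamma * r_1 + gamma**2 * r_2 + ... for one episode.

    gamma is a (hypothetical) discount factor; gamma=1.0 gives the plain,
    undiscounted cumulative reward.
    """
    if not 0.0 <= gamma <= 1.0:
        raise ValueError("gamma must be in [0, 1]")
    g = 0.0
    # Walk the episode backwards, applying G_t = r_t + gamma * G_{t+1}.
    for r in reversed(rewards):
        g = r + gamma * g
    return g


# Usage: an episode of three steps, each with reward 1.
print(discounted_return([1, 1, 1], gamma=1.0))  # 3.0
print(discounted_return([1, 1, 1], gamma=0.5))  # 1.75
```

A discount below 1 makes rewards received sooner count more than rewards received later, which keeps the return finite for very long interactions.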

General AI is likely to arrive sooner or later. Meanwhile, the number of narrow AI solutions is growing rapidly. We need tools to ensure that AI serves us well. Governments have already started to work on new laws regulating AI. In April 2021, the European Commission proposed the Artificial Intelligence Act [2] to regulate AI technologies across the European Union.

The ethics of AI

At the same time, there is an ongoing global debate on AI ethics. However, ethical AI is difficult to define. We do not have a precise definition of what is right and wrong: it depends on the context, and cultural differences come into play. There are no universal rules that could be implemented in ethical AI systems, and encoding such rules would add an extra layer of difficulty to building deep learning solutions, which is already a hard task. Defining ethical AI may well progress iteratively over time, and since we cannot predict all possible failures and their consequences, we will inevitably make mistakes and, hopefully, learn from them. In any case, there are measures that we, as the community of AI developers, can take to ensure the quality, fairness, and trustworthiness of our software.

Important aspects of ML system development

In data-driven AI system development, it is extremely important to understand and prepare the data used to build a model. This is a necessary step to choose a methodology properly and create a reliable system. It is also required to minimize the risk that past prejudices and biases hidden in the data are passed on to the final AI system. While it is tempting to start a project by prototyping deep learning algorithms, it is crucial to first fully understand the data and the underlying problem. If necessary, domain experts should be involved in the process. As for any software application, it is also important to apply proper security measures to protect the data.
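One simple first look at potential bias is to compare how labels are distributed across subgroups before any model is trained. The sketch below assumes the data are available as a list of records with a hypothetical sensitive attribute `group` and a binary `label`; the field names and the toy loan data are illustrative only. A large gap between groups does not by itself prove the data are unfair, but it is a signal worth discussing with domain experts.

```python
from collections import defaultdict


def positive_rate_by_group(records, group_key="group", label_key="label"):
    """Return {group: (number of samples, share of positive labels)}."""
    counts = defaultdict(int)
    positives = defaultdict(int)
    for rec in records:
        g = rec[group_key]
        counts[g] += 1
        positives[g] += int(rec[label_key] == 1)
    return {g: (counts[g], positives[g] / counts[g]) for g in counts}


# Hypothetical toy data: past loan decisions (1 = approved).
data = [
    {"group": "A", "label": 1}, {"group": "A", "label": 1},
    {"group": "A", "label": 0}, {"group": "A", "label": 1},
    {"group": "B", "label": 0}, {"group": "B", "label": 0},
    {"group": "B", "label": 1}, {"group": "B", "label": 0},
]
for group, (n, rate) in sorted(positive_rate_by_group(data).items()):
    print(f"group {group}: n={n}, positive rate={rate:.2f}")
# group A: n=4, positive rate=0.75
# group B: n=4, positive rate=0.25
```

A model trained on such data would likely learn to reproduce the gap, which is exactly the kind of inherited prejudice the paragraph above warns about.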

The next step in data preparation is splitting the data into training, validation, and test sets. Cross-validation is a commonly used technique that repeatedly partitions the development data into training and validation folds to verify the ability of a model to generalize to new samples, while a separate test set is typically held out for the final evaluation. While this method is widely adopted, it has one drawback: it silently assumes that the data are independent and identically distributed (the i.i.d. assumption). In many real-world scenarios, this assumption does not hold, for example when samples are consecutive points of a time series or several images of the same patient. This does not mean that cross-validation should not be used, but AI developers must be aware of the assumption to anticipate possible failures.
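A minimal, dependency-free sketch of k-fold cross-validation index splitting is shown below. It shuffles sample indices before splitting, which is exactly where the i.i.d. assumption enters: if samples are correlated, related samples can end up on both sides of a split and inflate the validation score. The function name and parameters are illustrative; scikit-learn provides tested equivalents in `sklearn.model_selection` (`KFold`, and `GroupKFold` or `TimeSeriesSplit` for data that are not i.i.d.).

```python
import random


def k_fold_indices(n_samples, k=5, seed=0):
    """Yield (train_idx, val_idx) pairs for k-fold cross-validation.

    Indices are shuffled before splitting, which implicitly assumes the
    samples are i.i.d.; do not use this as-is for time series or grouped data.
    """
    if not 2 <= k <= n_samples:
        raise ValueError("k must be between 2 and n_samples")
    indices = list(range(n_samples))
    random.Random(seed).shuffle(indices)
    # Spread the remainder so that fold sizes differ by at most one.
    fold_sizes = [n_samples // k + (1 if i < n_samples % k else 0) for i in range(k)]
    start = 0
    for size in fold_sizes:
        val_idx = indices[start:start + size]
        train_idx = indices[:start] + indices[start + size:]
        yield train_idx, val_idx
        start += size


# Usage: 10 samples, 3 folds; validation fold sizes are 4, 3, 3.
for train_idx, val_idx in k_fold_indices(10, k=3):
    print(len(train_idx), len(val_idx))
```

Each sample lands in exactly one validation fold, so averaging the k validation scores uses all the development data while never scoring a sample the model was trained on.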

There is another well-known problem related to the i.i.d. assumption, called domain shift or distribution shift. In short, it means that the training data are drawn from a different distribution than the real data fed to an AI system after deployment. For example, a model trained solely on stock images may not work when it is later applied to users’ photos (different lighting conditions, quality, etc.), and an autonomous car that learned to drive during the daytime may not perform flawlessly at night. AI developers must take into account that their model may fail in real life even if it works perfectly “in the lab” and, where possible, use domain adaptation techniques to minimize the effect of distribution shift.
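One simple way to notice a distribution shift after deployment is to compare the distribution of an input feature (for example, mean image brightness) in the training data with the same feature in incoming production data. The sketch below computes the two-sample Kolmogorov-Smirnov statistic, the largest gap between the two empirical cumulative distribution functions. The brightness values and the 0.3 alert threshold are hypothetical; in practice one might use a p-value (for example from `scipy.stats.ks_2samp`) or a domain-specific threshold. Detecting the shift is only the first step; it does not adapt the model.

```python
from bisect import bisect_right


def ks_statistic(sample_a, sample_b):
    """Two-sample Kolmogorov-Smirnov statistic: max |ECDF_a(x) - ECDF_b(x)|."""
    a, b = sorted(sample_a), sorted(sample_b)
    if not a or not b:
        raise ValueError("both samples must be non-empty")
    d = 0.0
    # The maximum gap between two step functions occurs at an observed value.
    for x in a + b:
        cdf_a = bisect_right(a, x) / len(a)
        cdf_b = bisect_right(b, x) / len(b)
        d = max(d, abs(cdf_a - cdf_b))
    return d


# Hypothetical mean-brightness values: daytime training data vs. night-time inputs.
train_brightness = [0.62, 0.70, 0.66, 0.75, 0.68, 0.71, 0.64, 0.73]
deployed_brightness = [0.21, 0.30, 0.25, 0.66, 0.28, 0.33, 0.24, 0.27]

d = ks_statistic(train_brightness, deployed_brightness)
if d > 0.3:  # hypothetical alert threshold
    print(f"possible distribution shift (KS statistic = {d:.2f})")
```

A statistic close to 0 means the two samples look alike; a value close to 1, as in this made-up night-time example, means they barely overlap.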

The right choice of metric is also crucial in AI system development. The commonly used accuracy may be a good option for some image classification tasks, but it fails to represent the quality of a model on an imbalanced dataset: if 99% of samples are negative, a model that always predicts the negative class achieves 99% accuracy without detecting a single positive case. In such cases, the F-score (the harmonic mean of precision and recall) is often preferred. MAPE (Mean Absolute Percentage Error) is often chosen for regression tasks. However, it penalizes negative errors (predictions higher than the true value) more heavily than positive ones: for non-negative predictions, an under-forecast can cost at most 100%, while an over-forecast is unbounded. If this is not desirable, sMAPE (symmetric MAPE) can be used instead. AI developers have to understand the advantages and shortcomings of metrics to choose the one adequate for the problem being solved.
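The sketch below illustrates both points with plain Python and made-up numbers. On an imbalanced dataset, a classifier that always predicts the majority class reaches high accuracy but an F1 score of zero. For regression, an over-forecast and an under-forecast with the same absolute error get different MAPE values, while sMAPE scores them equally. Note that sMAPE has several definitions in the literature; this one, with the mean of |true| and |predicted| in the denominator, is an assumption. Library versions exist as well (for example scikit-learn's `f1_score` and `mean_absolute_percentage_error`).

```python
def accuracy(y_true, y_pred):
    return sum(t == p for t, p in zip(y_true, y_pred)) / len(y_true)


def f1_score(y_true, y_pred, positive=1):
    """Harmonic mean of precision and recall for the positive class."""
    tp = sum(t == positive and p == positive for t, p in zip(y_true, y_pred))
    fp = sum(t != positive and p == positive for t, p in zip(y_true, y_pred))
    fn = sum(t == positive and p != positive for t, p in zip(y_true, y_pred))
    if tp == 0:
        return 0.0  # no true positives: precision or recall is zero (or undefined)
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    return 2 * precision * recall / (precision + recall)


def mape(y_true, y_pred):
    """Mean absolute percentage error in %; undefined when a true value is 0."""
    return 100 * sum(abs(t - p) / abs(t) for t, p in zip(y_true, y_pred)) / len(y_true)


def smape(y_true, y_pred):
    """Symmetric MAPE in % (variant dividing by the mean of |true| and |pred|)."""
    return 100 * sum(
        abs(p - t) / ((abs(t) + abs(p)) / 2) for t, p in zip(y_true, y_pred)
    ) / len(y_true)


# Imbalanced classification: 95 negatives, 5 positives, model always predicts 0.
y_true = [0] * 95 + [1] * 5
y_pred = [0] * 100
print(accuracy(y_true, y_pred))  # 0.95
print(f1_score(y_true, y_pred))  # 0.0

# Same absolute error of 50, once as an over-forecast, once as an under-forecast.
print(mape([100], [150]), mape([150], [100]))    # 50.0 and about 33.3
print(smape([100], [150]), smape([150], [100]))  # both about 40
```

The accuracy of 0.95 hides the fact that not a single positive case was found, which the F1 score exposes immediately.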

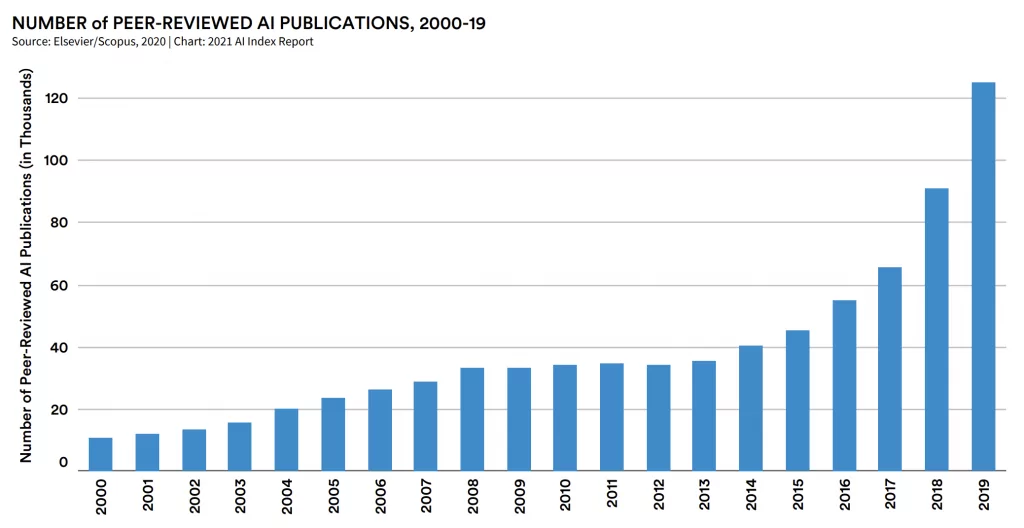

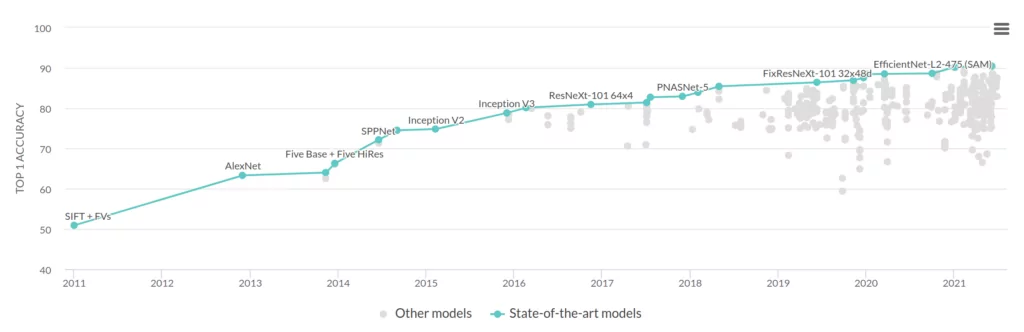

Finally, one has to select an appropriate model for the task. There are thousands of new AI publications every month (Fig 1). Many algorithms are proposed, and AI developers have to choose the right one for a particular problem. It is impossible to read every paper in the field, but it is necessary to at least know the state-of-the-art (SOTA) models and understand their pros and cons. It is important to follow new trends and to be aware of groundbreaking approaches. Sometimes a model loses its SOTA status within a few weeks (Fig 2).

Understanding AI methods

Many frameworks have been released to accelerate the ML development process. Libraries like PyTorch or TensorFlow allow for quick prototyping and experimenting with various models. They also provide tools that simplify deployment. Recently, AutoML (Automated Machine Learning) services, which allow non-experts to experiment with ML models, have gained popularity. This is definitely a step forward in spreading ML-based solutions across many different fields. However, choosing the right methodology and understanding it deeply are still crucial to building a reliable AI system.

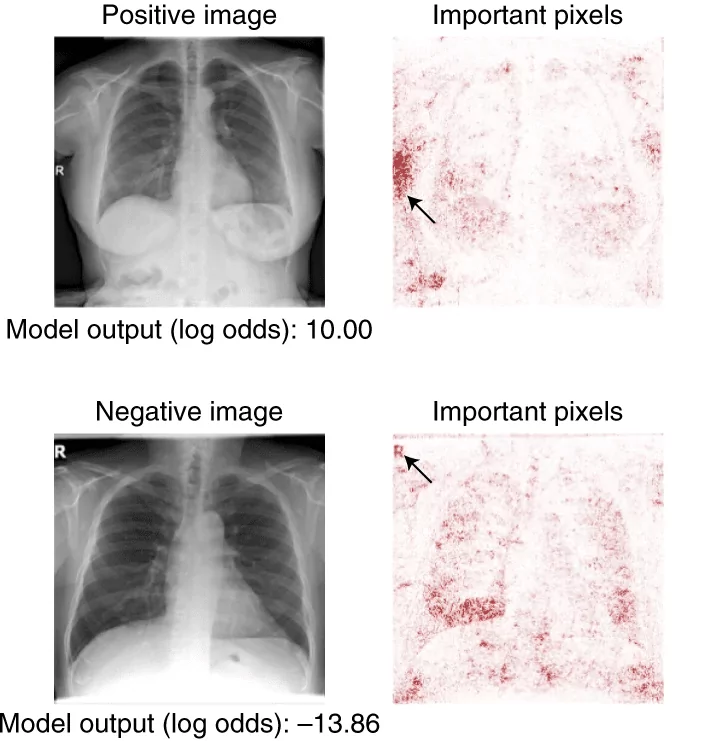

Regardless of the tool used, all the above aspects of the ML development process have to be considered carefully. It is important to remember that AI models optimize the objective they are given, and their apparent performance may be misleading. Researchers from the University of Washington reported how shortcuts learned by AI can trick us into believing that it knows what it is doing. Multiple models were trained to detect COVID-19 in radiographs, and they performed very well on a validation set created from data acquired in the same hospital as the training set. However, they failed completely when applied to X-rays from a different clinic. It turned out that the models had learned to recognize irrelevant features, such as text markers, rather than medical pathology (Fig 3).
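A validation scheme that reflects deployment more closely is to hold out entire data sources rather than random samples, so that the model is always evaluated on a hospital it has never seen. The sketch below assumes each sample carries a hypothetical `site` identifier; it is a plain-Python version of the idea behind grouped cross-validation, which scikit-learn offers as `LeaveOneGroupOut` and `GroupKFold`. It does not prevent shortcut learning by itself, but it makes a drop in performance on unseen sites visible before deployment.

```python
def leave_one_site_out(sites):
    """Yield (held_out_site, train_idx, val_idx), holding out one site at a time.

    `sites` lists the (hypothetical) acquisition site of each sample.
    """
    for held_out in sorted(set(sites)):
        train_idx = [i for i, s in enumerate(sites) if s != held_out]
        val_idx = [i for i, s in enumerate(sites) if s == held_out]
        yield held_out, train_idx, val_idx


# Hypothetical radiographs collected in three hospitals.
sites = ["hospital_A", "hospital_A", "hospital_B", "hospital_C", "hospital_B", "hospital_A"]
for site, train_idx, val_idx in leave_one_site_out(sites):
    # No image from the validation hospital is ever used for training.
    assert site not in {sites[i] for i in train_idx}
    print(site, "train:", train_idx, "val:", val_idx)
```

If a model scores well with random splits but poorly with this scheme, it may be relying on site-specific cues such as text markers rather than on the pathology itself.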

Towards reliable and trustworthy AI

On the one hand, machine learning is now more accessible to developers, and many interesting AI applications are emerging. On the other hand, people’s trust in AI decreases as the number of unreliable AI systems grows. It may take years before international standards arrive to certify the quality of AI-based solutions. However, it is time to start thinking about this. Recently, TÜV Austria, in collaboration with the Institute of Machine Learning at Johannes Kepler University, released a white paper on how AI and ML tools can be certified [6]. They proposed a catalog to be used for auditing ML systems. At the moment, the procedure covers only supervised learning applications with a low criticality level. The authors list the requirements an AI system must meet and propose a complete workflow for the certification process. It is a great starting point that can be extended to other ML applications in the future.

AI is everywhere. At this point, it “decides” what movie you are going to watch this weekend. In the near future, it may “decide” whether you get a mortgage or what medical treatment you receive. The AI community has to make sure that the systems it develops are reliable and fair. AI developers need a comprehensive understanding of the methods they apply and the data they use. Necessary steps must be taken to prevent prejudice and discrimination in the data from being passed on to AI systems. Fortunately, many researchers are aware of this and put a lot of effort into developing adequate tools, such as explainable AI, to help create AI we can trust.

References

[1] Silver, David, et al. “Reward is enough.” Artificial Intelligence (2021): 103535.

[3] Artificial Intelligence Index Report

[4] Papers with Code

Project co-financed from European Union funds under the European Regional Development Funds as part of the Smart Growth Operational Programme.

Project implemented as part of the National Centre for Research and Development: Fast Track.