At NeuroSYS we are currently working on user localization for our AR platform, nsFlow, which guides factory workers through their tasks by displaying instructions on smart glasses, so no prior training or supervision is needed. As we explained in our previous post, knowing the employee’s location is crucial for proper guidance and safety in the factory.

To solve the user localization problem we utilized algorithms from the Visual Place Recognition (VPR) field.

In the first part of this series, we provided a general introduction to VPR. Today we would like to present the solution we came up with for nsFlow.

As stated in our last post, VPR is concerned with recognizing a place based on its visual features. The recognition process is typically broken down into two steps. First, a photo of the place of interest is taken and keypoints (regions that stand out in some way and are likely to be found in other images of the same scene) are detected in it. Next, they are compared with the keypoints identified in a reference image, and if the two sets of keypoints are similar enough, the photos can be considered to show the same spot. The first step is carried out by a feature detector and the second by a feature matcher.

But how can this be applied to user localization?

Since we didn’t need the exact location of the user, only the room or workstation they were in, the problem could be simplified to place recognition. To that end, we used algorithms from the VPR field. Specifically, we focused on SuperPoint and SuperGlue, which are currently state of the art in feature detection and matching. Additionally, we applied NetVLAD for faster matching.

So much for the reminder from the last post. Now let’s move on to the most interesting part of this series, which is our solution.

Databases

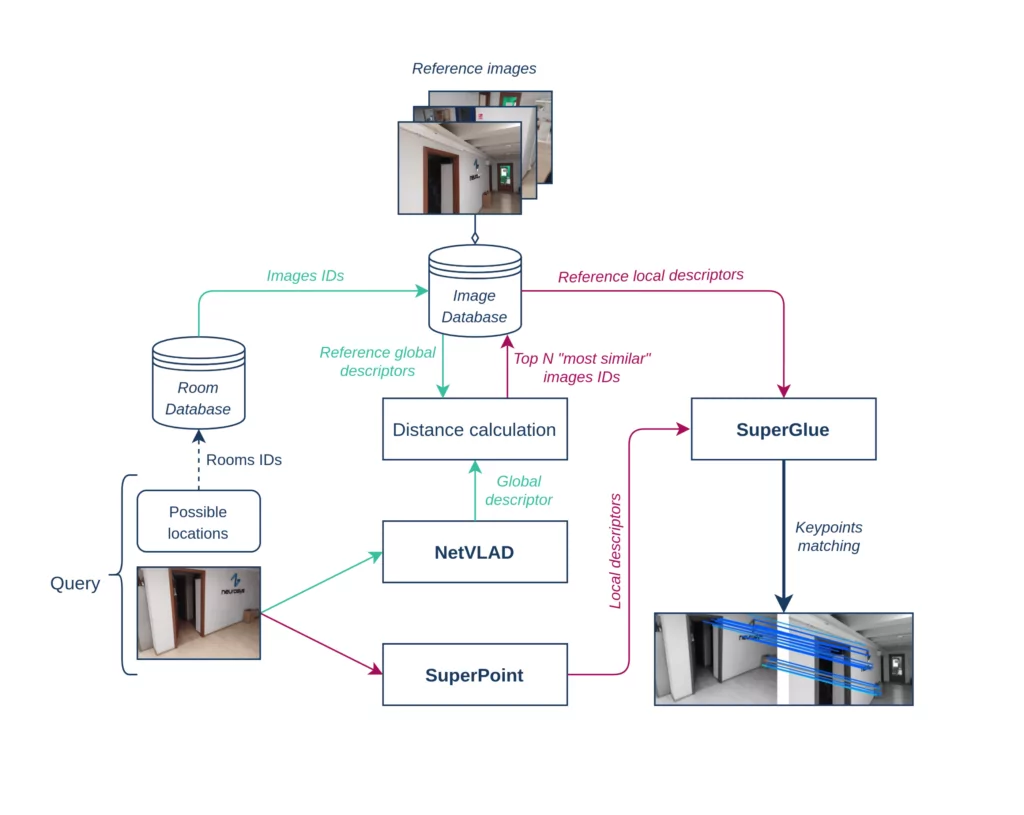

As the diagram above shows, our system contains two databases:

- an image database;

- a room database.

The role of the first one is to store the image of each location (possible workstation or room), as well as several additional properties, namely:

- a unique identifier;

- the image’s global descriptor;

- the image’s keypoints and local descriptors.

The room database associates each unique identifier with a room. A structure like this allows the system to be distributed between local machines (room database) and a computational server (image database), thus increasing the robustness and performance. Let’s now take a closer look at some of the image properties.
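The two databases can be pictured with a minimal Python sketch. Everything here is an illustrative assumption rather than the actual nsFlow schema: the `ImageRecord` class, its field names, the array sizes, and the sample identifiers and room names are all hypothetical.

```python
from dataclasses import dataclass
from typing import Dict

import numpy as np


@dataclass
class ImageRecord:
    """One image-database entry; field names are illustrative assumptions."""
    image_id: str                  # unique identifier, shared with the room database
    image: np.ndarray              # the stored reference image, shape (H, W)
    global_descriptor: np.ndarray  # one vector per image, shape (D,)
    keypoints: np.ndarray          # (x, y) coordinates, shape (K, 2)
    local_descriptors: np.ndarray  # one descriptor per keypoint, shape (K, d)


def make_dummy_record(image_id: str) -> ImageRecord:
    """Build a zero-filled record; all sizes are arbitrary placeholders."""
    return ImageRecord(
        image_id=image_id,
        image=np.zeros((48, 64)),
        global_descriptor=np.zeros(16),
        keypoints=np.zeros((3, 2)),
        local_descriptors=np.zeros((3, 8)),
    )


# Image database (computational server): identifier -> heavy per-image data.
image_db: Dict[str, ImageRecord] = {
    image_id: make_dummy_record(image_id) for image_id in ("img-001", "img-002")
}

# Room database (local machine): identifier -> room or workstation.
room_db: Dict[str, str] = {"img-001": "assembly hall", "img-002": "workstation 7"}


def room_for(image_id: str) -> str:
    """Resolve an image identifier to the room it was taken in."""
    return room_db[image_id]
```

Because the two mappings share only the identifier, the lightweight room lookup can live on a different machine from the descriptor data.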

Keypoint detection and matching

As stated above, a VPR system needs a feature detector to identify keypoints and a feature matcher to compare them with the database and choose the most similar image. Each keypoint consists of its (x, y) coordinates and a vector describing it (called the descriptor). The descriptor identifies the point and should be invariant to perspective, rotation, scale and lighting conditions. This allows us to find the same points in two different images of the same place (finding such pairs of keypoints is called matching).
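To make the idea of matching concrete, here is a minimal sketch of classical brute-force matching with a mutual nearest-neighbour check, written with NumPy. It is a simple baseline for illustration only, not the learned matcher used in our system; the function name and the toy descriptors are hypothetical.

```python
import numpy as np


def match_mutual_nearest(desc_a: np.ndarray, desc_b: np.ndarray) -> list:
    """Return pairs (i, j) where desc_a[i] and desc_b[j] are each other's nearest neighbour."""
    # Pairwise Euclidean distances, shape (len(desc_a), len(desc_b)).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    nearest_in_b = dists.argmin(axis=1)  # best candidate in B for every row of A
    nearest_in_a = dists.argmin(axis=0)  # best candidate in A for every row of B
    # Keep a pair only if the choice is mutual.
    return [(i, int(j)) for i, j in enumerate(nearest_in_b) if nearest_in_a[j] == i]


# Toy 2-D descriptors: B holds slightly perturbed copies of A plus one outlier.
desc_a = np.array([[1.0, 0.0], [0.0, 1.0]])
desc_b = np.array([[0.0, 0.9], [0.9, 0.0], [5.0, 5.0]])
matches = match_mutual_nearest(desc_a, desc_b)
```

In this toy example the outlier in `desc_b` is left unmatched, because no descriptor in `desc_a` selects it as its nearest neighbour.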

In our case we used a deep neural network called SuperPoint to detect keypoints. We chose it over classical methods of computing features, because it is able to extract more universal information. The other advantage of selecting SuperPoint is the fact that it performs better in tandem with the feature matching deep neural network named SuperGlue compared to other keypoint extractors.

SuperGlue also shows improved robustness in comparison to classical feature matching algorithms. In order to use it we needed to implement the network from scratch based on the SuperGlue paper. This was a challenge in itself and might be the topic of a future article. With our implementation we achieved results similar to those from the paper. The image below exemplifies how our network performs.

Even though SuperPoint and SuperGlue run at around 11 FPS on 2x NVIDIA GeForce RTX 2080 Ti, calculating the matches for all images in the database would be inefficient and would introduce high latency in the localization system. To solve this problem we added one step before local feature matching that lets us roughly estimate the similarity and further process only the most promising frames. Here we introduce the concept of global descriptors and their matching.
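A rough back-of-the-envelope estimate shows why a pre-filtering step matters. The sketch below assumes that 11 FPS translates to about 11 image-pair comparisons per second, and it uses a hypothetical database of 1,000 images and a shortlist of 10; none of these figures describe our actual deployment.

```python
def matching_latency_s(num_images: int, pairs_per_second: float = 11.0) -> float:
    """Estimated time to match one query against num_images database images."""
    return num_images / pairs_per_second


# Hypothetical sizes, chosen only to illustrate the scale of the difference.
full_scan = matching_latency_s(1000)  # match against every database image
shortlist = matching_latency_s(10)    # match against a pre-filtered top 10
print(f"full scan: {full_scan:.1f} s, shortlist: {shortlist:.2f} s")
```

Under these assumptions a full scan takes about a minute and a half per query, while the shortlist takes under a second, not counting the cost of the pre-filtering itself.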

Global descriptors and matching

In order to roughly estimate the similarity between two images we use global descriptors. They take the form of a vector that uniquely identifies the scene in a global sense. Here are some properties that the global descriptor should have:

- it should be invariant to the point of view – the same scene viewed from different perspectives should have global descriptors that are near each other in the vector space;

- it should be invariant to lighting conditions – the same scene viewed at different times of the day and under different weather conditions should have similar global descriptors;

- it should be insusceptible to temporary objects – the descriptor should not encode information about cars parked in front of the building or people walking by, but only information about the building itself.

In our case we used a deep neural network named NetVLAD to calculate the global descriptors. The network returns a vector that has all the aforementioned properties.

As in brute-force local descriptor matching, we calculate the distances between the query image’s global descriptor and the global descriptors of all images in the database. We then further process only the images with the top N “most similar” (closest) descriptors. This process can be called global descriptor matching.
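Global descriptor matching can be sketched in a few lines of NumPy. The function name and the toy 2-D descriptors are hypothetical, and Euclidean distance is an assumption made for this example; real global descriptors have far more dimensions.

```python
import numpy as np


def top_n_closest(query: np.ndarray, db_descriptors: np.ndarray, n: int):
    """Return indices and distances of the n database descriptors closest to query."""
    # Euclidean distance from the query to every database descriptor.
    dists = np.linalg.norm(db_descriptors - query, axis=1)
    order = np.argsort(dists)[:n]  # indices sorted from closest to farthest
    return order, dists[order]


# Toy database of four 2-D global descriptors.
db_descriptors = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 3.0], [0.5, 0.0]])
query = np.array([1.0, 0.1])
indices, distances = top_n_closest(query, db_descriptors, n=2)
```

Only the images at the returned indices are passed on to the more expensive local feature matching.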

Combining all parts together

So far we have explained the basic concepts upon which our solution is built and introduced neural networks that we used. Now is the time to combine these blocks into one working system.

As mentioned previously, there are two databases: one associating each image’s identifier with a room (for simplicity called the room database) and one storing more complex information about the image (keypoints, local descriptors and global descriptors). In order to localize the user, a query with an image of the current view is sent to the localization system. The server first calculates the necessary information about the new image: its global descriptor and keypoints. Next, it performs a rough estimation of the similarity by calculating the distances between the global descriptor of the query image and those of the images in the database. Subsequently, the N records corresponding to the shortest distances are chosen and processed further by SuperGlue, which compares the keypoints detected in the query image with the keypoints identified in the N chosen images from the database. Finally, the user location is determined based on the number of matching keypoints.
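The whole flow can be summarized in a short, hedged Python sketch. The three callables stand in for the global descriptor network, the keypoint detector and the matcher; the toy versions below are deliberately trivial placeholders, not the real networks. Picking the candidate with the most matches is one simple decision rule assumed for this example, and all names and data are hypothetical.

```python
import numpy as np


def localize(query_image, image_db, room_db,
             describe_global, extract_local, count_matches, n=5):
    """Return the room of the database image that best matches query_image."""
    # Step 1: compute the query's global descriptor and local features.
    q_global = describe_global(query_image)
    q_local = extract_local(query_image)
    # Step 2: rough similarity, keep the n closest global descriptors.
    ids = list(image_db)
    dists = np.array([np.linalg.norm(image_db[i]["global"] - q_global) for i in ids])
    shortlist = [ids[k] for k in np.argsort(dists)[:n]]
    # Step 3: local feature matching on the shortlist only.
    # Assumed decision rule: the candidate with the most matches wins.
    best_id = max(shortlist, key=lambda i: count_matches(q_local, image_db[i]["local"]))
    # Step 4: translate the image identifier into a room.
    return room_db[best_id]


# Toy stand-ins: an "image" is just an array whose rows act as local descriptors.
def toy_global(image):
    return image.mean(axis=0)


def toy_local(image):
    return image


def toy_count_matches(a, b):
    d = np.linalg.norm(a[:, None, :] - b[None, :, :], axis=2)
    return int((d.min(axis=1) < 0.1).sum())  # rows of a with a close row in b


hall = np.array([[0.0, 0.0], [1.0, 0.0], [0.0, 1.0]])
desk = np.array([[5.0, 5.0], [6.0, 5.0], [5.0, 6.0]])
image_db = {
    "img-001": {"global": toy_global(hall), "local": toy_local(hall)},
    "img-002": {"global": toy_global(desk), "local": toy_local(desk)},
}
room_db = {"img-001": "assembly hall", "img-002": "workstation 7"}

room = localize(desk + 0.01, image_db, room_db,
                toy_global, toy_local, toy_count_matches, n=2)
```

Passing the three stages in as functions keeps the control flow independent of any particular network, so the same skeleton works with real models in place of the toy stand-ins.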

That’s all we wanted to show you about VPR and our user localization system. We hope you found it interesting. In the next and last part of this series we will present how our localization system works in practice. Feel free to leave comments below if you have any questions. Stay tuned to read on!

Project co-financed from European Union funds under the European Regional Development Funds as part of the Smart Growth Operational Programme.

Project implemented as part of the National Centre for Research and Development: Fast Track.