We wanted to get familiar with Reinforcement Learning (RL), one of the three basic paradigms of machine learning, alongside supervised and unsupervised learning. RL aims to train an agent that acts within a given environment in order to reach a defined goal. It has a variety of applications, including robotics, control tasks, and playing computer games. The last one is a fun way to get started with RL, and the Obstacle Tower is a great environment to experiment with.

So we decided to try our hand at a new challenge from Unity, announced in February on aicrowd.com: the Unity Obstacle Tower Challenge.

Below we describe the challenge, give a short introduction to RL, and present the results we obtained in the first round.

Unity Obstacle Tower Challenge

The Obstacle Tower is a procedurally generated environment from Unity, intended to be a new benchmark for artificial intelligence research in reinforcement learning. The goal of the challenge is to create an agent that can navigate the Obstacle Tower environment and reach the highest possible floor before running out of time [1].

Below you can watch the video from the official webpage of the challenge:

Introduction to reinforcement learning

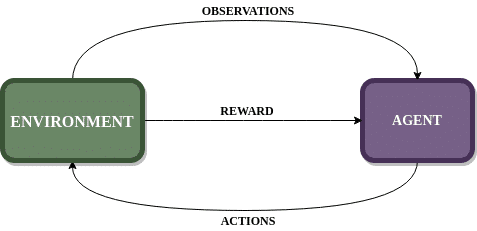

RL is the area of machine learning that most closely resembles the way humans learn. It is concerned with an agent interacting with an environment to achieve a goal. Such an agent can sense the state of the environment and take actions to affect it, and it is rewarded for its actions. The agent has to learn to achieve the goal from its own experience: by taking actions and observing the resulting state of the environment and the rewards it obtains.

One of the challenges in RL is the so-called trade-off between exploration and exploitation. To obtain the biggest reward, an agent has to take the actions that were found to be most effective in the past, but in order to find them, it has to try out new actions. Exploration is about gathering more information from the environment (trying out new actions), while exploitation uses only already known information to maximize the reward (taking the actions proven to lead to the biggest reward). An agent that pursues exploration or exploitation exclusively will most probably not succeed at the task. Instead, it has to balance the two: explore at the beginning and, with time and experience, increasingly favour the most effective actions.
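One common way to implement this balance is an epsilon-greedy policy with a decaying epsilon. The snippet below is a minimal sketch of that idea, not the exact exploration scheme we used; the function names and the values of `eps_start`, `eps_end` and `decay_steps` are illustrative assumptions.

```python
import random


def epsilon_at(step, eps_start=1.0, eps_end=0.05, decay_steps=10_000):
    """Linearly anneal epsilon from eps_start to eps_end over decay_steps.

    All default values are illustrative assumptions.
    """
    fraction = min(step / decay_steps, 1.0)
    return eps_start + fraction * (eps_end - eps_start)


def epsilon_greedy(q_values, epsilon, rng=random):
    """Return a random action index with probability epsilon (explore),
    otherwise the index of the highest estimated value (exploit)."""
    if rng.random() < epsilon:
        return rng.randrange(len(q_values))
    return max(range(len(q_values)), key=lambda a: q_values[a])


if __name__ == "__main__":
    estimated_values = [0.1, 0.9, 0.3]  # hypothetical value estimates for 3 actions
    for step in (0, 5_000, 10_000):
        eps = epsilon_at(step)
        action = epsilon_greedy(estimated_values, eps)
        print(f"step={step} epsilon={eps:.2f} action={action}")
```

Early in training epsilon is close to 1, so almost every action is random; as it decays, the agent relies more and more on what it has already learned.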

The other challenge in RL is that an agent does not always get an immediate reward. Most often it has to take multiple actions before it is rewarded. In more complicated scenarios the agent may have to learn to make sacrifices, giving up smaller rewards in order to gain a bigger one later [2].
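Delayed rewards are usually handled by having the agent maximize a discounted sum of future rewards rather than the immediate reward, as described in [2]. The sketch below illustrates this with made-up reward sequences; the discount factor `gamma` and the two example plans are assumptions chosen only for illustration.

```python
def discounted_return(rewards, gamma=0.99):
    """Sum of rewards where a reward received k steps ahead is weighted by gamma**k."""
    total = 0.0
    # Walk backwards so each step adds its reward to the discounted future.
    for reward in reversed(rewards):
        total = reward + gamma * total
    return total


if __name__ == "__main__":
    # Hypothetical plans: grab a small reward now, or wait for a big one later.
    greedy_plan = [1.0, 0.0, 0.0]
    patient_plan = [0.0, 0.0, 10.0]
    print(discounted_return(greedy_plan, gamma=0.9))
    print(discounted_return(patient_plan, gamma=0.9))
```

With these numbers the patient plan has the higher discounted return, so an agent optimizing this quantity would learn to skip the small immediate reward.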

Interaction between the agent and the environment is presented schematically below:

In our challenge, the Obstacle Tower is the environment and the player is the agent. The game consists of multiple levels, each built from several rooms. The agent has to pass through a door to enter the next room, and the last door gives it access to the next level. There are a few different types of doors. Some are already open, while others must be opened by the agent, either with a key or by solving a puzzle. The keys must be collected by the agent on the way. The agent may also gain extra time by collecting orbs. It receives a positive reward every time it reaches a door or collects an item. The goal of the game is to reach the highest floor of the tower before running out of time.

Many algorithms have been developed in the area of RL. For this task, we decided to apply Deep Q-Learning (DQN) with a set of improvements known collectively as Rainbow [3].

The main idea behind this algorithm and the results that we obtained with it are presented below.

Q-Learning

In Q-Learning, we first have to define the action space, for example: move left, move forward and move right. Next, a Q-Table is created, which stores a Q-Value for each state-action pair. A Q-Value represents the maximum expected future reward for taking a given action in a given state.

While interacting with the environment, the agent decides which action to choose based on the Q-Table. Higher Q-Values correspond to a greater expected reward in the future. In the beginning, the agent explores the environment by choosing random actions. With experience, it starts to exploit its knowledge and picks the actions with the biggest Q-Values. During the game, the Q-Table is updated based on the received reward and the maximum Q-Value in the next state.
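The update described above can be sketched as the standard tabular Q-Learning rule. This is a minimal illustration, not the code we ran: the learning rate `alpha`, the discount factor `gamma`, the `done` flag and the string state labels are assumptions introduced for the example.

```python
from collections import defaultdict

ACTIONS = ["left", "forward", "right"]  # the example action space from the text


def make_q_table():
    """Q-Table mapping each state to one Q-Value per action, initialised to 0."""
    return defaultdict(lambda: [0.0] * len(ACTIONS))


def q_update(q_table, state, action, reward, next_state, done,
             alpha=0.1, gamma=0.99):
    """Move Q(state, action) towards reward + gamma * max Q(next_state, .).

    alpha (learning rate) and gamma (discount factor) are illustrative values.
    """
    best_next = 0.0 if done else max(q_table[next_state])
    target = reward + gamma * best_next
    q_table[state][action] += alpha * (target - q_table[state][action])
    return q_table[state][action]


if __name__ == "__main__":
    q_table = make_q_table()
    # Hypothetical transition: in "room_a" the agent moved forward (index 1),
    # received a reward of 1.0 and ended up in "room_b".
    q_update(q_table, "room_a", 1, 1.0, "room_b", done=False)
    print(dict(q_table))
```

Each call nudges a single table entry, which is why the approach stops scaling once the number of distinct states becomes very large.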

Deep Q-Learning (DQN)

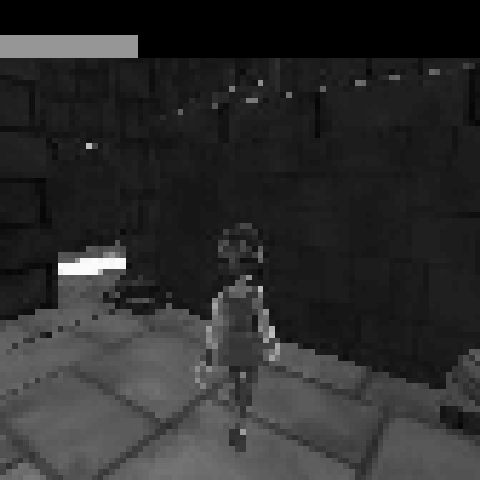

Creating and updating a Q-Table is inefficient for environments with a large state space, such as the Obstacle Tower. In the Obstacle Tower environment, the state is simply three consecutive screenshots from the game concatenated together. Instead of a table, a neural network can be used to approximate the Q-Values for each state-action combination. In this case a convolutional neural network (CNN) is applied, as CNNs are known to work well on data with a grid-like topology (such as images), to predict the Q-Value of each possible action based on the screenshots.

Below is the resized screenshot that is used to train the CNN:

To train the CNN, the agent collects experience and stores transitions in memory. A transition consists of the state (three consecutive screenshots), the action that the agent took, the reward it obtained and the resulting state. The agent then samples transitions from the memory and uses them to update the parameters of the network so that it gives a better approximation of the Q-Values.
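The memory described above is often implemented as a fixed-size replay buffer. The sketch below assumes uniform sampling and a simple one-step target; Rainbow [3] replaces both with more elaborate variants (such as prioritized replay and multi-step targets). `ReplayMemory`, `td_targets`, the `done` field and `q_values_fn`, a stand-in for the network's forward pass, are hypothetical names used only for illustration.

```python
import random
from collections import deque, namedtuple

# One stored experience; "done" (episode ended) is an added assumption.
Transition = namedtuple(
    "Transition", ["state", "action", "reward", "next_state", "done"]
)


class ReplayMemory:
    """Fixed-size memory that discards the oldest transitions when full."""

    def __init__(self, capacity):
        self.buffer = deque(maxlen=capacity)

    def push(self, state, action, reward, next_state, done):
        self.buffer.append(Transition(state, action, reward, next_state, done))

    def sample(self, batch_size, rng=random):
        """Draw a uniform random batch of distinct stored transitions."""
        return rng.sample(list(self.buffer), batch_size)

    def __len__(self):
        return len(self.buffer)


def td_targets(batch, q_values_fn, gamma=0.99):
    """Regression targets for the network: reward + gamma * max Q(next_state, .).

    q_values_fn is a placeholder that maps a state to a list of Q-Values.
    """
    targets = []
    for t in batch:
        bootstrap = 0.0 if t.done else max(q_values_fn(t.next_state))
        targets.append(t.reward + gamma * bootstrap)
    return targets


if __name__ == "__main__":
    memory = ReplayMemory(capacity=1000)
    memory.push("frames_0", 1, 0.0, "frames_1", False)
    memory.push("frames_1", 2, 1.0, "frames_2", True)
    batch = memory.sample(2)
    # Dummy Q-function that returns the same values for every state.
    print(td_targets(batch, lambda state: [0.0, 0.5, 0.2]))
```

In a full DQN training loop, the network's predicted Q-Value for each stored action would be regressed towards these targets.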

Results

We managed to reach the 5th floor of the Obstacle Tower, which put us in 22nd place (out of 97 submissions) in the first round of the challenge. Below you can see how our agent navigates the environment:

The Obstacle Tower is a procedurally generated environment, so rooms vary in texture, lighting and object geometry. This means that the agent has to learn to cope with varying conditions in order to succeed at the task. So far we have trained an agent that can reach the 5th floor of the tower. Getting there requires locating open doors and going through them to the next rooms. On the 5th floor a new type of door appears: a locked door that can only be opened with a key. Our agent does not realize that it has to collect the key, since it has not encountered this situation before, and thus cannot pass. We want to experiment more with DQN and also try out other algorithms in order to train an agent with more capabilities.

References

[1] Juliani, Arthur, et al. “Obstacle Tower: A Generalization Challenge in Vision, Control, and Planning.” arXiv preprint arXiv:1902.01378 (2019).

[2] Sutton, Richard S., and Andrew G. Barto. Reinforcement Learning: An Introduction. MIT Press, 2018.

[3] Hessel, Matteo, et al. “Rainbow: Combining improvements in deep reinforcement learning.” Thirty-Second AAAI Conference on Artificial Intelligence. 2018.