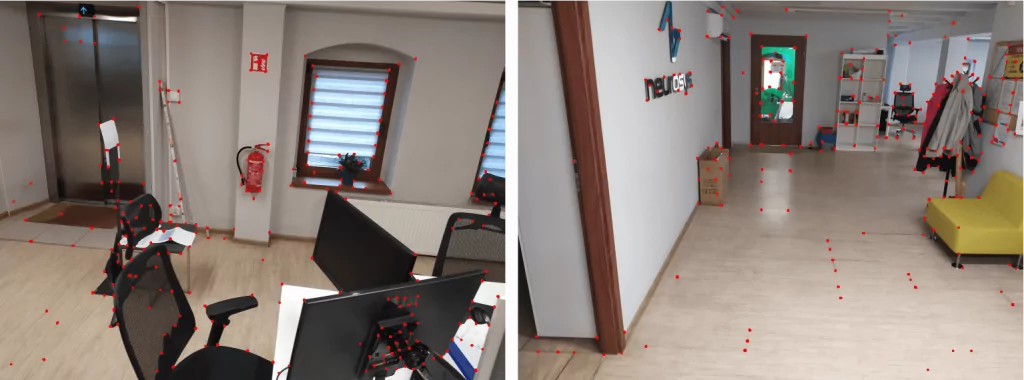

Currently at NeuroSYS we are working on a very exciting project: nsFlow, our AI & AR platform for industrial processes, which enables unassisted training of factory workers. Through our platform, users equipped with smart glasses see instructions tailored to their workplace and the activities to be performed. Thanks to this, they do not require prior training or supervision, which significantly speeds up and simplifies onboarding for both the employee and the employer.

One problem we had to tackle was user localization. It was of great importance for two main reasons. Firstly, knowing where an employee is located is crucial to ensuring safety in the factory. Since newcomers being trained are not familiar with the workplace, there is a risk that they will unwittingly leave it or undertake some dangerous action. Making sure they are in the right place, and warning them about the dangers present at various positions, significantly increases their safety and helps to avoid accidents. Secondly, knowing the user’s location allows us to choose the appropriate training for the workplace. Employees might expect different instructions depending on where they are working. Choosing the training manually on the device is highly impractical and can sometimes introduce hazards of its own. All in all, knowing where the user is located can significantly improve guidance, user experience and safety.

To solve the problem of user localization we utilized algorithms belonging to the field of Visual Place Recognition (VPR).

In this three-part blog series, we would like to introduce you to the exciting world of VPR. We will review the existing solutions and show the cutting-edge technology at the intersection of industrial augmented reality and the latest research in computer vision that we applied in the positioning system created for nsFlow.

Indoor Positioning Systems

As mentioned before, our aim was to localize the user. Since the product is primarily intended for indoor use, where GPS-based positioning might not be reliable, we focused on indoor positioning system (IPS) techniques. Let’s look at some of the many existing solutions:

- Wi-Fi-based positioning – utilises existing network infrastructure such as wireless access points to measure signal strength and estimate position,

- Bluetooth beacons – use low-energy devices that transmit their identifiers to a nearby smartphone or AR glasses,

- Magnetic positioning – is based on detecting variations in Earth’s magnetic field and using them to create a database of fingerprints,

- Positioning based on visual markers – uses easily recognizable markers (e.g. QR codes, ArUco markers) spread out in the search space.
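To make the fingerprinting idea behind Wi-Fi and magnetic positioning more concrete, here is a minimal sketch of nearest-fingerprint lookup on signal strengths. It is only an illustration, not part of nsFlow: the location labels, access point names, RSSI values and the `MISSING_RSSI` default are all made-up assumptions.

```python
import math

# Hypothetical fingerprint database: location label -> mean RSSI (dBm) per access point.
FINGERPRINTS = {
    "assembly_line": {"ap1": -45.0, "ap2": -70.0, "ap3": -82.0},
    "warehouse": {"ap1": -78.0, "ap2": -50.0, "ap3": -66.0},
    "packing_station": {"ap1": -85.0, "ap2": -72.0, "ap3": -48.0},
}

# Assumed placeholder value for an access point that was not heard in a scan.
MISSING_RSSI = -100.0


def rssi_distance(scan, fingerprint):
    """Euclidean distance between two RSSI readings over the union of access points."""
    access_points = set(scan) | set(fingerprint)
    return math.sqrt(sum(
        (scan.get(ap, MISSING_RSSI) - fingerprint.get(ap, MISSING_RSSI)) ** 2
        for ap in access_points
    ))


def locate(scan, fingerprints=FINGERPRINTS):
    """Return the label of the stored fingerprint closest to the current scan."""
    return min(fingerprints, key=lambda label: rssi_distance(scan, fingerprints[label]))


# A scan taken near the assembly line resolves to that label.
current_scan = {"ap1": -47.0, "ap2": -68.0, "ap3": -80.0}
print(locate(current_scan))
```

Even in this toy form, the database of fingerprints has to be collected on-site for every location before the lookup can work.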

In our use case, each of the methods mentioned above offers sufficient precision, but we have also noticed their shortcomings:

- the necessity to set markers or beacons,

- the need to create a database of fingerprints or install additional access points,

- demanding deployment process – in most scenarios we need physical access to the target space and that involves travelling and working on-site.

While wondering how to overcome the above-mentioned difficulties, we realized that in our case they could be avoided altogether. After all, we didn’t need to know the exact location of the user. We were only interested in the workplace or room they were in. We came up with a solution that relies solely on images and VPR algorithms, with no need to travel on-site or set up markers.

We will present our method for user localization in the next part of this series. Today let’s briefly review some existing VPR algorithms.

Visual Place Recognition

VPR is concerned with recognizing a place based solely on its visual features, e.g. shapes and colors. It is typically done by estimating the similarity between a query image and a reference (ground truth) image of a known place. There exists a broad range of computer vision algorithms that tackle this problem. They can be divided into two categories: classical, hand-crafted VPR and learned VPR.
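The similarity-based formulation can be sketched in a few lines. The example below assumes each image has already been reduced to a fixed-length descriptor vector (how to obtain one is the subject of the rest of this post). The place labels, the vectors, the choice of cosine similarity and the `min_similarity` threshold are all illustrative assumptions, not our production setup.

```python
import numpy as np


def cosine_similarity(a, b):
    """Cosine similarity between two 1-D descriptor vectors."""
    return float(np.dot(a, b) / (np.linalg.norm(a) * np.linalg.norm(b)))


def recognize_place(query, references, min_similarity=0.8):
    """Return (label, score) of the most similar reference.

    If even the best score is below min_similarity, the label is None,
    meaning the query does not resemble any known place.
    """
    scores = {label: cosine_similarity(query, desc) for label, desc in references.items()}
    best = max(scores, key=scores.get)
    if scores[best] < min_similarity:
        return None, scores[best]
    return best, scores[best]


# Hypothetical reference descriptors, one per known place.
references = {
    "welding_station": np.array([0.9, 0.1, 0.3, 0.2]),
    "paint_shop": np.array([0.1, 0.8, 0.2, 0.6]),
}

# A query descriptor that lies close to the welding station reference.
query = np.array([0.85, 0.15, 0.25, 0.2])
label, score = recognize_place(query, references)
print(label, round(score, 3))
```

The threshold matters in practice: without it, every query would be assigned to some known place, even when the user is somewhere the system has never seen.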

Classical VPR

Prior to the huge deep learning boom of the 2010s, most solutions to VPR used carefully designed feature detectors and matchers. Firstly, the feature detector finds keypoints in the image that are likely to be found in other images of the same scene as well. These points of interest are simply regions that stand out in some way and are thus easily distinguishable. The next step is to create a feature descriptor, which is a compact vector representation of the region around the keypoint. One method of vectorizing image data would be to use pixel colors. Would it work? Most probably yes. Would it reliably compare different image areas? Not really. By relying on color only, we ignore a lot of valuable information, e.g. the shape or texture around the keypoint. By taking these properties into account, more sophisticated descriptors can be obtained. Lastly, the feature matcher checks whether the image pair contains the same keypoints, based on the similarities between feature descriptors. The main strength of techniques of this kind is that they are relatively fast and easy to use, but they often lack the robustness required in large-scale applications.
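The last step, feature matching, can be illustrated with a small sketch. It assumes the descriptors have already been computed and stored as rows of a NumPy array. As the matching rule it uses the nearest-neighbour ratio test popularized by SIFT, which is one common choice rather than the only one. The function name, the `ratio` value and the random test data are assumptions made for illustration.

```python
import numpy as np


def match_descriptors(desc_a, desc_b, ratio=0.8):
    """Match rows of desc_a to rows of desc_b using a nearest-neighbour ratio test.

    desc_a has shape (n_a, d) and desc_b has shape (n_b, d), with n_b >= 2.
    Returns a list of (index_in_a, index_in_b) pairs.
    """
    # Pairwise Euclidean distances, shape (n_a, n_b).
    dists = np.linalg.norm(desc_a[:, None, :] - desc_b[None, :, :], axis=2)
    matches = []
    for i, row in enumerate(dists):
        order = np.argsort(row)
        best, second = order[0], order[1]
        # Accept only if the best candidate is clearly closer than the runner-up;
        # ambiguous keypoints (e.g. repetitive texture) are dropped.
        if row[best] < ratio * row[second]:
            matches.append((i, int(best)))
    return matches


# Toy data: image B has 5 random descriptors; image A re-observes three of them
# with a little noise, standing in for the same keypoints seen from another view.
rng = np.random.default_rng(0)
desc_b = rng.normal(size=(5, 16))
desc_a = desc_b[[3, 0, 4]] + rng.normal(scale=0.01, size=(3, 16))
print(match_descriptors(desc_a, desc_b))
```

The number of surviving matches can then serve as a simple similarity score between two images: the more keypoints two pictures share, the more likely they show the same place.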

Learned VPR

Lately, methods based on deep learning have revolutionized the field of computer vision. When it comes to VPR, deep learning is used to learn useful keypoint detectors and descriptors from data instead of hand-engineering them.

It is convenient, no doubt, but as with every solution it does not come without drawbacks. Deep neural networks are black boxes by nature, so if you are obligated to explain the model’s decision-making process, you will struggle much more than you would with classical approaches. We haven’t yet dived into details, but you might have built some intuition based on the brief discussion of “classical” descriptors above. No matter whether an algorithm calculates color gradients, tracks motion trajectories, detects edges or does some other fancy stuff, the important thing is that the operation is clearly defined. If we know how the algorithm works, then we can try to investigate it. How about deep neural networks? Here it is far harder to tell what happens inside. Of course we can try to comprehend what the network focuses on, and there exist methods for that, but we will not be examining them today. Explainable Deep Learning is an active research field, so if you are interested we encourage you to explore it on your own or check out our previous blog posts on the topic.

The next challenge we have to overcome is data labeling. In learnable matching the network needs supervision, so we need to explicitly determine correspondence points. Gathering ground truth annotations is a complicated task, as we need to know exactly where the camera was at the moment the picture was taken.

Nonetheless, we did not bring up these difficulties to discourage you from using deep learning. On the contrary, we believe that deep neural networks are a great tool and we have been using them successfully.

In our research, we focused on the SuperPoint and SuperGlue models, which are among the state-of-the-art approaches to feature detection and feature matching, respectively.

That’s it for today. In the next part of this series we will present our solution for user localization. Feel free to leave comments below if you have any questions. Stay tuned!

If you want to find out more about nsFlow, please visit our website.

Do you wish to talk about the product or discuss how your industry can benefit from our edge AI & AR platform? Don’t hesitate to contact us!

Project co-financed from European Union funds under the European Regional Development Funds as part of the Smart Growth Operational Programme.

Project implemented as part of the National Centre for Research and Development: Fast Track.